13 March 2018: There is an updated post on this subject – https://www.sqlinthewild.co.za/index.php/2018/03/13/revisiting-catch-all-queries/

The query form that I refer to as ‘Catch-all’ typically results from search screens in the application where the user may enter any one (or more) of a number of optional parameters. One of the more common ways for such a query to be written in SQL is with multiple predicates in the where clause of the form (WHERE SomeColumn = @SomeVariable OR @SomeVariable IN NULL)

Now this does work, the problem is that it works fairly inefficiently and, on large tables, can result in really poor query performance. I’m going to take a look at why that is the case and what alternatives there are.

Erland Sommarskog has written on this as well, and in a lot more detail than I’m going to. His article on dynamic search conditions is well worth reading, as are the rest of his articles.

A typical example of a ‘catch-all’ query would be this one, based off a table in the AdventureWorks database.

CREATE PROCEDURE SearchHistory (@Product int = NULL, @OrderID int = NULL, @TransactionType char(1) = NULL, @Qty int = NULL) AS SELECT ProductID, ReferenceOrderID, TransactionType, Quantity, TransactionDate, ActualCost from Production.TransactionHistory WHERE (ProductID = @Product Or @Product IS NULL) AND (ReferenceOrderID = @OrderID OR @OrderID Is NULL) AND (TransactionType = @TransactionType OR @TransactionType Is NULL) AND (Quantity = @Qty Or @Qty is null) GO

Now, let’s say that I run that query and pass values for the ProductID and the Transaction type. Let’s further say that there’s a nonclustered index (called idx_TranHistory_TranTypeProductID) on those two columns.

EXEC SearchHistory @Product = 978, @TransactionType = 'W'

Now this returns 52 rows out of 980000 that are in the table, so we’d expect that SQL would use an index seek operation on that index, followed by a bookmark lookup.

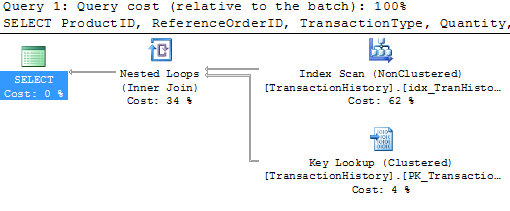

Nope. It’s using that index all right, but it’s doing a scan, not a seek. Ok, not great, but not bad. Let me try a different set of parameters

EXEC SearchHistory @Qty = 100

The plan’s exactly the same. No surprise, it was cached the first time and then reused. There’s a problem here though, the index that’s used is completely inappropriate and there’s a bookmark lookup that ran almost a million times. No wonder this execution took 3 seconds and 2,949,715 IOs to return 29 rows.

Ok, so let me try a different form of the catch-all query

CREATE PROCEDURE SearchHistory_Improved (@Product int = NULL, @OrderID int = NULL, @TransactionType char(1) = NULL, @Qty int = NULL) AS SELECT ProductID, ReferenceOrderID, TransactionType, Quantity, TransactionDate, ActualCost from Production.TransactionHistory WHERE (ProductID = CASE WHEN @Product IS NULL THEN ProductID ELSE @Product END) AND (ReferenceOrderID = CASE WHEN @OrderID IS NULL THEN ReferenceOrderID ELSE @OrderID END) AND (TransactionType = CASE WHEN @TransactionType IS NULL THEN TransactionType ELSE @TransactionType END) AND (Quantity = CASE WHEN @Qty IS NULL THEN Quantity ELSE @Qty END) GO

Let’s see what that does for the first test:

EXEC SearchHistory_Improved @Product = 978, @TransactionType = 'W'

Well that’s no better. Full blown table scan.

The problem with these types of queries is that there is no stable plan. The optimal plan differs completely depending on what paramters are passed. The optimiser can tell that and it plays safe. It creates plans that will always work. That’s (one of the reasons) why in the first example it was an index scan, not an index seek.

The downside of the safe plan is that it’s highly unlikely to be a good plan and, even if it is, it won’t be good for all possible combinations of plans.

So, how to handle this type of query? Well, there are typically two ways.

Recompile

This is only an option on SQL 2008. On 2008, if the query is specified with the OPTION (RECOMPILE) hint, then the optimiser knows it doesn’t have to worry about safe plans because the plan will never be reused. In fact, if I add that hint to the query in the first example, I get the expected index seek.

Dynamic SQL

The other option is to build up the query string dynamically, based on the parameters passed and then to use sp_executesql to run it. There are the ususal downsides to dynamic SQL but, it may be that the performance improvement is worth it.

CREATE PROCEDURE SearchHistory_Dynamic (@Product int = NULL, @OrderID int = NULL, @TransactionType char(1) = NULL, @Qty int = NULL) AS DECLARE @sSQL NVARCHAR(2000), @Where NVARCHAR(1000) = '' SET @sSQL = 'SELECT ProductID, ReferenceOrderID, TransactionType, Quantity, TransactionDate, ActualCost from Production.TransactionHistory ' IF @Product is not null SET @Where = @Where + 'AND ProductID = @_Product ' IF @OrderID is not null SET @Where = @Where + 'AND ReferenceOrderID = @_OrderID ' IF @TransactionType IS NOT NULL SET @Where = @Where + 'AND TransactionType = @_TransactionType ' IF @Qty IS NOT NULL SET @Where = @Where + 'AND Quantity = @_Qty ' IF LEN(@Where) > 0 SET @sSQL = @sSQL + 'WHERE ' + RIGHT(@Where, LEN(@Where)-3) EXEC sp_executesql @sSQL, N'@_Product int, @_OrderID int, @_TransactionType char(1), @_Qty int', @_Product = @Product, @_OrderID = @OrderID, @_TransactionType = @TransactionType, @_Qty = @Qty GO

Note that there’s no SQL injection vulnerability in this. The parameters are never concatenated into the string and the execution is parametrised.

Now each different set of parameters gets a different cached plan, optimal for that particular set of parameters.

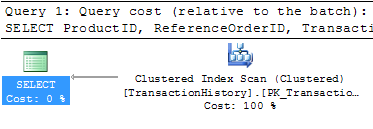

EXEC SearchHistory_Dynamic @Product = 978, @TransactionType = 'W' EXEC SearchHistory_Dynamic @Qty = 100

The first gets an index seek, the second a clustered index scan (because there’s no index on Quantity). Much better than the behaviour with the earlier non-dynamic versions.

G’morning Gail,

I just got referred in to this post from somewhere or other, and since I use a variety of NULL conditions, I was wondering how my preferred structure works, since I don’t see it above.

I often use this structure:

WHERE (CASE WHEN @ProductID IS NULL THEN 1

WHEN @ProductID = ProductID THEN 1

ELSE 0

END) = 1

More efficient, same, less efficient, or truly horrible?

When using multi-value parameters, I try to always bust the passed in string down to a table (variable or temp, depending on my mood), then join to the result.

One definite takeaway for me will be to use the OPTION(RECOMPILE) in any of my procs where I’m allowing a NULL parameter.

Same (I tested it on a forum post, not here).

It has the same performance effects as the WHERE (@ProductID IS NULL OR ProductID = @ProductID), however I don’t know if OPTION(RECOMPILE) will help that form, depends whether the optimiser can recognise the construct or not (also make sure you’re on 2008 SP2 or above before trying RECOMPILE)

Yo Gail.

Is there any reason that you do a check on the @where and then subtract the first AND. Instead of doing a @where with a static ‘WHERE 1 = 1 ‘ then add the clauses with AND’s.

I generally find it’s a little easier to debug because you are doing less string manipulation.

Cheers.

Transact Charlie.

Because I heartily dislike the WHERE 1=1 .. form in the where clause and I would never willingly use or promote code that contains it.

Wonderful article. Thanks for posting. Was exactly the help i needed.

This is very nice – BUT you can not use it in Table Valued Functions (TVF), since it uses an EXEC !!

Any hint on how to use dynamic WHERE in TVF ?

Nice Article 🙂

Gail – How might we modify this to use LIKE and IN? If I modify it as follows:

IF @firstname is not null

SET @Where = @Where + ‘AND OrderID LIKE (”@_OrderID%”) ‘

… PRINT sSQL produces:

WHERE firstname LIKE (‘@_OrderID%’)

… which looks and executes correct when run manually. However I receive no results from the stored proc when run. Any ideas?

Apologies – I screwed up the code in my previous post by trying to adapt it to your example. Please ignore and consider this instead:

Gail – How might we modify this to use LIKE and IN? If I modify it as follows:

IF @OrderID is not null

SET @Where = @Where + ‘AND OrderID LIKE (”@_OrderID%”) ‘

… PRINT sSQL produces:

WHERE OrderID LIKE (‘@_OrderID%’)

… which looks correct as far as I can tell. However I receive no results from the stored proc when run. Any ideas?

LIKE @Order + ”%”

Pingback: Case TSQL statement in WHERE clause causing scanning (ss2005)

Gail, in Erland Sommarskog’s blog that you reference above, he suggests that building the query including the parameterized WHERE clause in client-side code is perfectly acceptable. Any thoughts?

Perfectly valid, just make sure that it is parameterised correctly.

I showed it as a stored proc because I often encounter the ‘catch-all’ form in existing procs and have to tune it without making any changes to the client app.

Thank you for the great, simple, write up. I chose to use dynamic sql, which I have used before, but not in such a clean way. Another thing I like about doing the parameterized list is that it will often lead to shorter sql statements which is helpful when you have large queries that are approaching the 4000 NVARCHAR limit.

Thank you for a great article! It really helped me. I have a small query though. How much performance advantage, in terms of time, does the dynamic query score against the other methods?

I have a stored procedure that I need to select registers that have at least one parameter in the WHERE clause.

example:

CREATE PROCEDURE [dbo].[SPPeople]

(

@Name varchar(50) = null,

@Status1 char(1) = ‘0’,

@Status2 char(1) = ‘0’,

@Status3 char(1) = ‘0’

)

as

SELECT People.Name, People.Age

FROM People

WHERE

(People.Name = CASE WHEN @Name is null THEN People.Name ELSE @Name END) AND

( (People.Status1 = CASE WHEN @Status1 = ‘0’ THEN People.Status1 ELSE @Status1 END) OR

(People.Status2 = CASE WHEN @Status2 = ‘0’ THEN People.Status2 ELSE @Status2 END)

(People.Status3 = CASE WHEN @Status3 = ‘0’ THEN People.Status3 ELSE @Status3 END) )

——

If parameters @Status1 and @Status2 are equal ‘1’ the query should bring registers that necessarily have Status1 OR Status2 equal to ‘1’, not depending on Status3.

But the query above brings all registers when I mark @Status1 and @Status2 as ‘1’.

Can you post this question on one of the SQL forums please?

I am wrong in my taking this as “parameter sniffing” and would it help if a local variable hodling a copy of the parameter value be used in the T-SQL statements ?

It’s related to parameter sniffing, but it’s not parameter sniffing and local variables won’t suddenly give you a good plan. At best, they’ll give you a stable, bad plan.

If my catch-all only has 1 parameter, would it be better to create 2 additional procs and nest them in the first; i.e. only adding 1 extra condition based on a not null parameter? In the case I am referring to, they are either returning all rows in the set if the parameter is null, or filtered if the parameter is not null.

See https://www.sqlinthewild.co.za/index.php/2009/09/15/multiple-execution-paths/

Nice article. Like to see more of these articles..

Thank you, thank you, thank you! Great solution, and enough detail to understand what you’re getting at without getting too into the weeds.

Thank you Thank you Thank you

I had a consultant insist on no dynamic sql and all new queries were constructed using common table expressions. I am comparing performance between parameterized dynamic sql and cte’s because the results are being filtered on multiple delimited value lists as well as a general string search. Have you ever used cte’s to perform the dynamic filtering on delimited string lists? Wondering if this is sacrificing performance for principles. I reviewed the query plans and it seems that having to do the splits and exists whether a filter value is included or not is a bit more costly. Having said that, after initial execution, subsequent calls are much faster. Would appreciate your feedback. Thanks!

cte example:

proc (@fkid1 bigint, @valueslist1…, @valueslist2…, @srchtext…)

as…

with cte_filter1(value)

as (select value from dbo.split(@valueslist1, N’,’),

cte_filter2(value)

as (select value from dbo.split(@valueslist2, N’,’),

cte_results1 (id, fkid1, value, filtercolumn1, filtercolumn2)

as (select t.id, t.fkid1, t.value, t.filtercolumn1, t.filtercolum2

from dbo.table1 t

where t.fkid1 = @fkid1

and (

@valueslist1 is null

or exists(select f1.value from cte_filter1 f1

where f1.value = t.filtercolumn1))

and (@valueslist2 is null

or exists(select f2.value from cte_filter2 f2

where f2.value = t.filtercolumn2))

)

select id, fkid1, value, filtercolumn1, filtercolumn2

from cte_results1

where value like (‘%’ + @srchtext + ‘%’)

A CTE has no magic to do filtering like this. It’s just a named subquery. You’re not gaining or losing performance by using a CTE over a single query.

Second and onward executions are always faster, the initial has to cache both data and query plan. Generally ignore the first execution and look at performance for later ones.

Pingback: Resolved: How to compare Entity framework Any() with lower comparing objects - Daily Developer Blog