13 March 2018: There is an updated post on this subject – https://www.sqlinthewild.co.za/index.php/2018/03/13/revisiting-catch-all-queries/

The query form that I refer to as ‘Catch-all’ typically results from search screens in the application where the user may enter any one (or more) of a number of optional parameters. One of the more common ways for such a query to be written in SQL is with multiple predicates in the where clause of the form (WHERE SomeColumn = @SomeVariable OR @SomeVariable IN NULL)

Now this does work, the problem is that it works fairly inefficiently and, on large tables, can result in really poor query performance. I’m going to take a look at why that is the case and what alternatives there are.

Erland Sommarskog has written on this as well, and in a lot more detail than I’m going to. His article on dynamic search conditions is well worth reading, as are the rest of his articles.

A typical example of a ‘catch-all’ query would be this one, based off a table in the AdventureWorks database.

CREATE PROCEDURE SearchHistory (@Product int = NULL, @OrderID int = NULL, @TransactionType char(1) = NULL, @Qty int = NULL) AS SELECT ProductID, ReferenceOrderID, TransactionType, Quantity, TransactionDate, ActualCost from Production.TransactionHistory WHERE (ProductID = @Product Or @Product IS NULL) AND (ReferenceOrderID = @OrderID OR @OrderID Is NULL) AND (TransactionType = @TransactionType OR @TransactionType Is NULL) AND (Quantity = @Qty Or @Qty is null) GO

Now, let’s say that I run that query and pass values for the ProductID and the Transaction type. Let’s further say that there’s a nonclustered index (called idx_TranHistory_TranTypeProductID) on those two columns.

EXEC SearchHistory @Product = 978, @TransactionType = 'W'

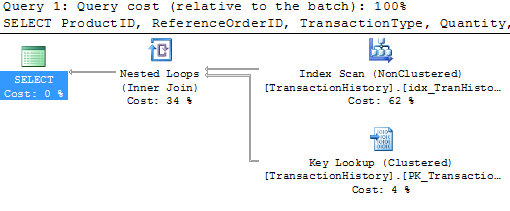

Now this returns 52 rows out of 980000 that are in the table, so we’d expect that SQL would use an index seek operation on that index, followed by a bookmark lookup.

Nope. It’s using that index all right, but it’s doing a scan, not a seek. Ok, not great, but not bad. Let me try a different set of parameters

EXEC SearchHistory @Qty = 100

The plan’s exactly the same. No surprise, it was cached the first time and then reused. There’s a problem here though, the index that’s used is completely inappropriate and there’s a bookmark lookup that ran almost a million times. No wonder this execution took 3 seconds and 2,949,715 IOs to return 29 rows.

Ok, so let me try a different form of the catch-all query

CREATE PROCEDURE SearchHistory_Improved (@Product int = NULL, @OrderID int = NULL, @TransactionType char(1) = NULL, @Qty int = NULL) AS SELECT ProductID, ReferenceOrderID, TransactionType, Quantity, TransactionDate, ActualCost from Production.TransactionHistory WHERE (ProductID = CASE WHEN @Product IS NULL THEN ProductID ELSE @Product END) AND (ReferenceOrderID = CASE WHEN @OrderID IS NULL THEN ReferenceOrderID ELSE @OrderID END) AND (TransactionType = CASE WHEN @TransactionType IS NULL THEN TransactionType ELSE @TransactionType END) AND (Quantity = CASE WHEN @Qty IS NULL THEN Quantity ELSE @Qty END) GO

Let’s see what that does for the first test:

EXEC SearchHistory_Improved @Product = 978, @TransactionType = 'W'

Well that’s no better. Full blown table scan.

The problem with these types of queries is that there is no stable plan. The optimal plan differs completely depending on what paramters are passed. The optimiser can tell that and it plays safe. It creates plans that will always work. That’s (one of the reasons) why in the first example it was an index scan, not an index seek.

The downside of the safe plan is that it’s highly unlikely to be a good plan and, even if it is, it won’t be good for all possible combinations of plans.

So, how to handle this type of query? Well, there are typically two ways.

Recompile

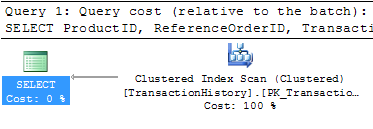

This is only an option on SQL 2008. On 2008, if the query is specified with the OPTION (RECOMPILE) hint, then the optimiser knows it doesn’t have to worry about safe plans because the plan will never be reused. In fact, if I add that hint to the query in the first example, I get the expected index seek.

Dynamic SQL

The other option is to build up the query string dynamically, based on the parameters passed and then to use sp_executesql to run it. There are the ususal downsides to dynamic SQL but, it may be that the performance improvement is worth it.

CREATE PROCEDURE SearchHistory_Dynamic (@Product int = NULL, @OrderID int = NULL, @TransactionType char(1) = NULL, @Qty int = NULL) AS DECLARE @sSQL NVARCHAR(2000), @Where NVARCHAR(1000) = '' SET @sSQL = 'SELECT ProductID, ReferenceOrderID, TransactionType, Quantity, TransactionDate, ActualCost from Production.TransactionHistory ' IF @Product is not null SET @Where = @Where + 'AND ProductID = @_Product ' IF @OrderID is not null SET @Where = @Where + 'AND ReferenceOrderID = @_OrderID ' IF @TransactionType IS NOT NULL SET @Where = @Where + 'AND TransactionType = @_TransactionType ' IF @Qty IS NOT NULL SET @Where = @Where + 'AND Quantity = @_Qty ' IF LEN(@Where) > 0 SET @sSQL = @sSQL + 'WHERE ' + RIGHT(@Where, LEN(@Where)-3) EXEC sp_executesql @sSQL, N'@_Product int, @_OrderID int, @_TransactionType char(1), @_Qty int', @_Product = @Product, @_OrderID = @OrderID, @_TransactionType = @TransactionType, @_Qty = @Qty GO

Note that there’s no SQL injection vulnerability in this. The parameters are never concatenated into the string and the execution is parametrised.

Now each different set of parameters gets a different cached plan, optimal for that particular set of parameters.

EXEC SearchHistory_Dynamic @Product = 978, @TransactionType = 'W' EXEC SearchHistory_Dynamic @Qty = 100

The first gets an index seek, the second a clustered index scan (because there’s no index on Quantity). Much better than the behaviour with the earlier non-dynamic versions.

Nice post Gail. I do have a question that I probably should already know the answer to, but I don’t so I’ll ask. How do you handle security in the dynamic sql option since it changes context and I typically would not grant any read rights to a user?

Great article, Gail! This was one of those things I had to learn the hard way when I was first getting started. It seemed so much easier to build one wider proc with a few extra parameters than to build several procs that did almost the same thing. I’ve since learned that the performance just isn’t there, which you’ve done a great job of illustrating.

I tend to use ‘prepared SQL’ whenever I have a search-type proc, and it seems to perform fairly well.

Good read indeed, it sometimes presents as a problem in SSRS reports as well, as users love to have so many parameters to choose from (NULL or multi-value, cascading parameters, …)

And people tend to not think too much when it is just “reports” vs production.

Jack: EXECUTE AS on the stored procedure.

So one user who only has read rights on the specific tables that are used in the dynamic SQL (and no other rights at all, including login rights) and then the procedure definition would be

CREATE PROCEDURE SearchHistory_Dynamic

WITH EXECUTE AS ‘DynamicSQLUser’

AS

….

good article. thanks

now a question :

is the second way suggested also prevents parameter sniffing or should I use the following code:

CREATE PROCEDURE SearchHistory_Improved (@Product int = NULL, @OrderID int = NULL, @TransactionType char(1) = NULL, @Qty int = NULL)

AS

declare @i_ProductId int

declare @i_OrderId int

declare @i_TransactionType char(1)

declare @i_Qty int

set @i_ProductId = CASE WHEN @Product IS NULL THEN ProductID ELSE @Product END

set @i_OrderId = CASE WHEN @OrderID IS NULL THEN ReferenceOrderID ELSE @OrderID END

set @i_TransactionType = CASE WHEN @TransactionType IS NULL THEN TransactionType ELSE @TransactionType END

set @i_Qty = CASE WHEN @Qty IS NULL THEN Quantity ELSE @Qty END

SELECT ProductID, ReferenceOrderID, TransactionType, Quantity, TransactionDate, ActualCost from Production.TransactionHistory

WHERE ProductID = @i_ProductId

AND ReferenceOrderID = @i_OrderId

AND TransactionType = @i_TransactionType

AND Quantity = @i_Qty

GO

That code you’ve posted doesn’t work.

Msg 207, Level 16, State 1, Procedure SearchHistory_Improved, Line 9

Invalid column name ‘ProductID’.

Msg 207, Level 16, State 1, Procedure SearchHistory_Improved, Line 10

Invalid column name ‘ReferenceOrderID’.

Msg 207, Level 16, State 1, Procedure SearchHistory_Improved, Line 11

Invalid column name ‘TransactionType’.

Msg 207, Level 16, State 1, Procedure SearchHistory_Improved, Line 12

Invalid column name ‘Quantity’.

Look at your SET statements. They reference columns of a table within them.

Even if you could do that, it wouldn’t work as well as the dynamic or recompiled versions because the optimiser can’t sniff the value of variables and tends to make bad guesses about cardinality

See – https://www.sqlinthewild.co.za/index.php/2008/05/22/parameter-sniffing-pt-2/

A very good post Gail. Thanks..

How does COALESCE(@Qty, Quantity) = Quantity perform?

Considering that a COALESCE is treated internally as a CASE statement, probably the same as the case statement. I’ll test it and the ISNULL option and post a followup later this week.

I’m confused. In the article it states:

Note that there’s no SQL injection vulnerability in this. The parameters are never concatenated into the string

But, looking at the code in the window, they are concatenated. What did I miss?

Gail,

I’ve done the exact same thing – just a little different.

SET @sSQL = ‘SELECT ProductID, ReferenceOrderID, TransactionType, Quantity, TransactionDate, ActualCost

from Production.TransactionHistory

WHERE 1 = 1 ‘

IF @Product is null

SET @P1 = ”

ELSE

SET @P1 = ‘AND ProductID = @_Product ‘

.

.

.

IF @Qty IS NULL

SET @P4 = ”

ELSE

SET @P4 = ‘AND Quantity = @_Qty ‘

SET @SQL = @sSQL + @P1 + @P2 + @P3 + @P4

…

What is the cost of the reassignment of the strings each time in your scenario? Is it negligible?

Doug

Dan: Where?

The parameters that were passed to the function were never concatenated into the string. There’s no piece of code that looks like this

SET @Where = @Where + ‘AND TransactionType =”’ + @_TransactionType + ””

That’s concatenating the value of the parameter into the string and that’s what makes a piece of dynamic code vulnerable to injection.

What I have done is set up variables within the SQL string (that’s what the @_Qty and the others are) and then passed them values at execution time using sp_executesql.

Of course indexes don’t work on dynamic “where” clause, whatever you use, COALESCE, CASE, IS NULL, etc. And dynamic “where” clause is not always bad or always good. Just do smart job, check you parameters before run query. If you have some parameters on indexed column run them first and run other on subsequent. This is not some magic; it always depends on every case.

Alex, could you explain in more detail please? I’m not sure I fully understand what you’re suggesting.

Gail,

Good article – this is a common situation at my current employer, usually going unnoticed until the inappropriate plan results in a 1,000-fold increase in the execution cost.

The usual defense here is to keeep the original proc as a wrapper for variations of the original, where the variations deal with specific more targetted combinations of meaningful parameters. Of course it’s impractical to do this for every possible combination of (say) 10 possible parameters. It’s usually enough to write purpose-specific procs to avoid the major plan inefficiencies, and have the other (less diabolical) combinations fall back to the catch-all query.

As I sad it is really depend on every case, but usually, on big tables it is better to run as much better plan as you can get first and save result to some where, on temporary table for example, and after run what ever left on that result. I hope my explanation clear, English not my first language.

Alex: Yup, I think I understand you. Use some of the filters first, save to a temp table and then filter further.

Can you maybe give a short code example (on made up tables, or the Adventureworks tables) as I would like to do a followon post with some timings on various options.

Hi Gail.

This is an intersting topic and I run some tests myself to see how this works and got puzzled by one thing.

There is an index on ProductID in the Production.TransactionHistory table.

However I can not figure out why the server is not using it even if the query is as plain as this one

SELECT ProductID, ReferenceOrderID, TransactionType, Quantity, TransactionDate, ActualCost

from Production.TransactionHistory

WHERE ProductID = 331

It uses Clustered Index Scan

But if I create a new index on Quantity and run this

SELECT ProductID, ReferenceOrderID, TransactionType, Quantity, TransactionDate, ActualCost

from Production.TransactionHistory

WHERE Quantity = 100

I got Index Seek with Key Lookup

Any ideas what am I missing.

Most likely it’s due to selectivity. If those indexes aren’t covering then there’s a cost to using the NC index as SQL has to do lookups to the cluster for each row. The tipping point is usually about 0.5% of the table.

If the query needs more than that, SQL’s likely to table scan rather than doing the NC index seek and lots and lots of key lookups.

I wrote a blog entry a while back on this – https://www.sqlinthewild.co.za/index.php/2009/01/09/seek-or-scan/

Pingback: Catch all queries and indexing - SQL and the like

Gail, I must be missing something because I’ve tinkered with yoru example and no matter what I try I get errors. I repalced all the charatcers that did not copy over correctly like the single quotes but still it errors out on me.

Gail – Nevermind I figured out my problem. The sample AdventureWorks DB is set to a case senative collation.

I think that may depend on the server collation. AdventureWorks is case insensitive on my server (as are all of my DBs). After a rather nasty experience a few years back I’m allergic to case-sensitive databases 😉

Thank you so much for this article, it was very informative and extremely helpful. This is by far one of the most useful SQL articles I’ve read over the years!

I think you left out an important section from “Dynamic Search Conditions”. Instead of using this form:

WHERE (key1 = @key1 OR @key1 IS NULL)

AND (key2 = @key2 OR @key2 IS NULL)

AND (key3 = @key3 OR @key3 IS NULL

you should consider using this:

WHERE (key1 = @key1 AND @key1 IS NOT NULL)

OR (key2 = @key2 AND @key2 IS NOT NULL)

OR (key3 = @key3 AND @key3 IS NOT NULL)

As Erland Sommarskog says, “The odds are very good that SQL Server will generate a plan which seeks the three key indexes and then merges the result either by index concatenation or some other method. Here is the real big scoop: thanks to the condition, @x IS NOT NULL, SQL Server adds a filter with a startup expression to the plan, so if the corresponding variable is NULL, SQL Server will not access that index at all.”

I have found in my testing that this solution performs very well; indexes ARE used, performance is great, and dynamic code is not required.

I thought that dynamic SQL was a worse choice than the catch-all option, but this article says otherwise ? I got a big chunk of dynamic sql from a contractor and it’s harder to follow than non-dynamic. What are the drawbacks of dynamic ?

Main drawbacks of dynamic is security. Also the loss of syntax highlighting, compile time error checking and intellisense. It’s not something I’d use everywhere, just for the places it does work well (and this is one of them)

David Walker, your query isn’t logically the same. If 3 keys were passed in, your OR query would pull all rows where any one matches, not rows where all three match.

I guess Walker’s query logic can be modified as such to handle the scenario when two or three parameters are passed.

WHERE ((key1 = @key1 AND @key1 IS NOT NULL) OR (@key1 IS NULL))

AND ((key2 = @key2 AND @key2 IS NOT NULL) OR (@key2 IS NULL))

AND ((key3 = @key3 AND @key3 IS NOT NULL) OR (@key3 IS NULL))

btw, this logic would be a better approach than the dynamic query..atleast for these types of situatios…

Gail,

How would you use this methodology when working with a list of keywords aka a keyword search where a hit on any of the keywords should return a record (if there are multiple hits, just return the record once).

Frankly, if I was doing keyword searches I’d probably be using full text search.

Could you also use the WITH RECOMPILE option on the proc if you have SQL 2005 instead of 2008? Would that give the same result as the OPTION (RECOMPILE) query hint as there is only one statement in the proc?

No, you’ll still get the non-optimal plan. In 2005 the optimiser is required to create plans that are safe for reuse, even if they won’t be reused (because of recompile). Only in 2008 SP2 onwards does the recompile work properly – plans optimal for that particular parameter set.

Gail,

I have, in the past, done extract to temp table and then filter and found significant performance boost. This does depend on what indexes are present and there is at least one mandatory search parameter. So, for example, using AdventureWorks and using mandatory FromDate and ToDate for TransactionDate, the revised SQL would look like

SELECT ProductID, ReferenceOrderID, TransactionType, Quantity, TransactionDate, ActualCost

INTO #hist

FROM Production.TransactionHistory

WHERE TransactionDate BETWEEN @FromDate AND @ToDate

IF @Product IS NOT NULL DELETE #hist WHERE ProductID @Product

IF @OrderID IS NOT NULL DELETE #hist WHERE ReferenceOrderID @OrderID

IF @TransactionType IS NOT NULL DELETE #hist WHERE TransactionType @TransactionType

IF @Qty IS NOT NULL DELETE #hist WHERE Quantity @Qty

SELECT ProductID, ReferenceOrderID, TransactionType, Quantity, TransactionDate, ActualCost

FROM #hist

So what do you do in your Dynamic query when ‘WHERE TransactionType IS NULL’ is a valid search parameter? Do you have to pass a different value and filter/build the query based on that? If you pass NULL instead of just leaving the parameter out of the EXEC that calls SearchHistory_Dynamic, is there a way to tell?

Maybe another parameter for the proc?

@TranType INT, @TranTypeIsNull BIT, …

and then add additional logic when you’re building up the dynamic SQL. Or maybe a special value for the parameter that means ‘column is null’, -1 or MinInt if the column is identity

Or use a non NULL char that is not a valid TransactionType (maybe empty string) to mean ‘ALL’

Dave, I had thought of the non-null other value and that’s a great way to do it for most of the data types, but one of the ones I’m concerned about is a bit column where 1, 0 and NULL are all valid entries.

Gail, thanks! I hadn’t thought of using 2 variables for the same column, but I think that’ll work perfectly for NULL bit columns.

Stefan. Yeah that is always a problem especially when extended to dates 🙁

For bit columns you could use and translate a tinyint for bit column selection, ie 0=0 1=1 2=NULL 3=ALL

I have done this before, as long as both ends talk the same translation and data type not a problem although the use of a different data type may be unconfortable.

Hey thanks for these good examples. I’m working in a query where the user enters different parameters and still don’t know which option I’m going to take. I have done dynamic SQL before but I don’t feel very confident about that. Still as you were saying, the performance could be way better than having several conditions in the WHERE clause.

Pingback: Be wary of using ISNULL() for retreiving all rows « Simran Jindal's Blog

Hi Gail

Wanted to say thanks (way too late though..) for this really great post.

Dunno how often I referred people here till now and just did it again.

Greets

Flo

Hi

i want to find the combination of Amounts…

For Example : i am inputing amount in textbox vb.net, and result should be all the combination of that entered amount in DB.

textbox.text = 500 then result = lets say

1. 500

2. 250, 250 (in 2 rows)

3. 100,100,100,100,100 (in 5 rows)

is this possible?

Someone can help me on this Please..

My E-mail Id is <removed>

Regards,

Dipesh

Please post this somewhere like the MSDN forums. There’s no where near enough information given, and your question has nothing to do with this post.

Hi, i’m new to sql and i have this problem with updating data from another table.

i want to update table2.message based on the criteria of table1.name. for example, all records named John will be updated with ‘Msg1’ in table 2.message. Im using MS SQL 2000 and below is the scenario.

<editor: removed>

do you have suggestion on how to update this in bulk without preselecting all the names?

Lin, best if you post this on a SQL forum, like the ones at MSDN or SQLServerCentral. Your question has nothing to do with the post.

Gail

just to let you now I’ve referenced this page from something I’ve posted to my site. http://glossopian.co.uk/pmwiki.php?n=Main.TestScriptGenerator

I hope you enjoy it.

Nice. I’d leave a comment there, but I don’t see any way to do so.

Hi Gail, Niice article, I wish I could follow the dynamic SQL route but am still struggling alomng with SQL 2005. I got around the inefficiency by intoducing pagination to my query. I get 10 (or 20,30 etc) records key fields with all the parameters (the query itself is very convoluted, so not going to even attempt to post it), then get the detail I need in a following query. This and other changes (many of my parameters are multi-select, so used a CLR to split into mem tables), took a query that was taking well over a second (usually about 1600ms) down to <100ms even for the worst culprits.

The dynamic SQL route works fine in SQL 2005. In fact, on 2005 it’s the best option because you can’t use the OPTION(RECOMPILE)