Good day everyone and welcome to another week of SQL University. I know we’re getting close to the end of the year and everyone’s looking forward to a nice long vacation soaking up the sun at the beach, but a little bit of attention would be nice. Thank you.

This week is Advanced Indexing, and I mean advanced, none of that selectivity, SARGable, predicate stuff that gets repeated all over the place. If you need a refresher on the basics before we get started, the following can be considered pre-requisite reading for this course

- Introduction to Indexes, Part the First

- Introduction to Indexes, Part the Second

- Introduction to Indexes, Part the Third

- Recommendations for a Clustered Index

- Index Usage

There’s also some additional background material available for more enthusiastic students:

- Introduction to Indexes

- Introduction to Indexes: Part 2 – The clustered index

- Introduction to Indexes: Part 3 – The nonclustered index

- Index Internals (Video)

- The Clustered Index Debate (Video)

- Index Selectivity and Column Order

Right, now that the admin has been handled, let’s get straight into things. Nothing like starting at the deep end…

Most people would rightly associate indexes with where clause predicates and joins, after all, the main usage of an index is to reduce the rows in consideration for a query as fast as possible. However there’s another portion of your queries that indexes can, if appropriately designed, help with – grouping and sorting.

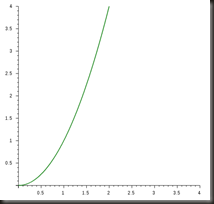

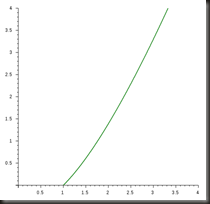

Sorting is an extremely expensive operation, especially on large numbers of rows. For the academics in the audience, the algorithmic complexity of sorting is above linear, the time to sort a set of data increases faster than the number of items in the list. The common sorting algorithms have an average time complexity of O(n log n). It’s better than O(n2), but it can still hurt at the higher row counts.

O(n2) on the left, O(n log n) on the right (Thanks to QuickMath)

Right, the non-academics can wake up now.

The other reason that sorting hurts is that it needs a memory grant to do the sort. If there’s a shortage of memory the query could have to wait a while for the memory to be granted and, if the optimiser mis-estimates the number of rows to be sorted, the memory grant could be insufficient and the sort would have to spill into TempDB. You don’t want that happening.

Finally, sort is a blocking operator in the execution plan (all rows must have been fetched from the source before any output can be returned), and so the client won’t start seeing rows returned until the entire sort has been completed. This can make the query feel like it takes longer than it really does.

Grouping and aggregation are much the same. To aggregate one set of values based on another set of values, SQL has to get all the like values of the grouping columns together so that it can do the aggregation. That sounds suspiciously like a sort doesn’t it?

SQL doesn’t always sort to do aggregations, but the alternative – hash tables – isn’t exactly free (homework exercise – read up on hash tables)

So for both sorting and grouping, the query processor’s job would be a lot easier if there was some way that it could get the data ordered by the sorting or grouping columns without having to do the work of actually sorting. Sounds impossible? No.

Enter indexes. The b-tree structure of an index makes it great for quickly finding matching rows, but today we’re more interested in the leaf-level of the index than the upper levels. The leaf level of an index is logically ordered by the index key (not physically ordered, let’s stop that myth right here please).

The leaf level of an index is logically ordered by the index key columns. So, if the index leaf level is read, it can return the data in the order of the index key. If that order is what the query processor needs in order to process a sort or grouping, then there’s no need for an expensive sort to be done, the underlying order can be leveraged.

Now, before someone misquotes me and goes off crying out that data is always returned in the order of the index used, no it is not. If there’s no order by on a query, there’s no guarantee of order regardless of indexes. However if there is an order by, the query optimiser and query processor may be able to utilise the underlying index order and avoid the cost of actually sorting the data.

Ok, enough theory for now, let’s look at some practical examples. We’ll use AdventureWorks here and I’m going to use the TransactionHistoryArchive table (in the Product schema)

Firstly a simple (and silly) example:

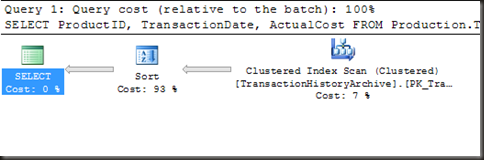

SELECT ProductID, TransactionDate, ActualCost FROM Production.TransactionHistoryArchive ORDER BY ActualCost

This one’s not very realistic, but it’s a nice simple one to start with. Give me all the rows in the table ordered by the ActualCost column. There’s no filter here so many would say that indexes can’t help here, and it’s true that an index can’t help with finding rows (because all are required), but it can help.

Table ‘TransactionHistoryArchive’. Scan count 1, logical reads 1419, physical reads 0.

SQL Server Execution Times:

CPU time = 421 ms, elapsed time = 4855 ms.

421ms of CPU time, with an estimated cost of 93% for the sort. Let’s see if we can make this any better.

If I create an index on ActualCost, that gives SQL the option of scanning the index (scan, because there’s no seek predicate) to retrieve the data in the order of the ActualCost column. I’m going to have to make it a covering index, as there is no way at all that SQL will willingly do key lookups for every single row of the table.

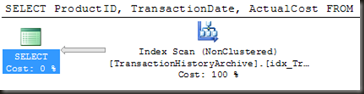

CREATE NONCLUSTERED INDEX idx_TransactionHistoryArchive_ACtualCost ON Production.TransactionHistoryArchive (ActualCost) INCLUDE (ProductID, TransactionDate)

Let’s see how that’s changed the query’s execution.

Table ‘TransactionHistoryArchive’. Scan count 1, logical reads 682, physical reads 0.

SQL Server Execution Times:

CPU time = 47 ms, elapsed time = 4073 ms.

The reads have dropped slightly, because we’re now scanning a nonclustered index which is smaller than the clustered index, but that’s not the main point here. The CPU usage has dropped by around a factor of 8. 421ms down to 47ms. If this was a critical query that ran several times a minute then this change could make a nice improvement to throughput and overall CPU usage.

That was a silly example, let’s try for something a little more complex:

SELECT ProductID , TransactionDate , TransactionType , Quantity , ActualCost FROM Production.TransactionHistoryArchive WHERE TransactionType = 'S' AND ReferenceOrderID = 51739 ORDER BY TransactionDate

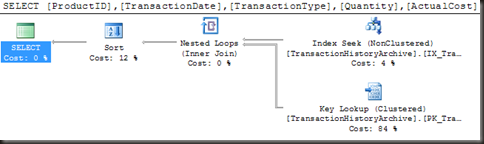

If we look at the execution plan, there’s already an index in use. There’s an index on ReferenceOrderID, but it’s clearly not as good as it could be.

Table ‘TransactionHistoryArchive’. Scan count 1, logical reads 222, physical reads 0.

SQL Server Execution Times:

CPU time = 0 ms, elapsed time = 1 ms.

Well, it’s not exactly taking ages on the CPU (it’s a tiny resultset), but this can still be better than it is. The key lookup is there because the existing index is only on two columns – ReferenceOrderID and ReferenceOrderLineID. I can’t modify that index for this query without potentially breaking some other query’s use of it, so I’ll create a new index.

CREATE NONCLUSTERED INDEX idx_TransactionTypeReferenceOrderID ON Production.TransactionHistoryArchive (TransactionType, ReferenceOrderID) INCLUDE (ProductID, TransactionDate, Quantity, ActualCost)

Why TransactionType first? Come back on Friday and I’ll be discussing that.

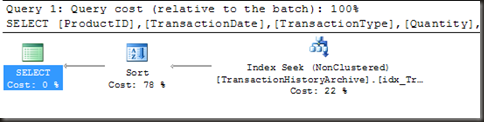

Plan’s now much simpler, the key lookup has gone, and now the sort is the majority of the cost

The key to getting rid of that sort is to understand that a multi-column index is sorted by the entire key. So, if we have an index on Col1, Col2, then for rows where Col1 has the same value, those rows are listed within the index ordered by Col2. In other words, if we read the leaf level of that index it would look like this:

Col1 Col2 A 1 A 3 A 8 B 4 B 8 B 9 B 14 C 0 \C 1

And so on and so on. Hence, if I filtered that by Col1 = B, the resulting rows could be returned ordered by Col2 with no additional work.

So in the above case, if I add the sort column (TransactionDate) as a key column in the index (it’s currently an Include column), then once the filter on TransactionType and ReferenceOrderID is done, the qualifying rows can be read in order of TransactionDate.

Let’s drop the index we created and create one with TransactionDate as an additional key column.

CREATE NONCLUSTERED INDEX idx_TransactionTypeReferenceOrderID ON Production.TransactionHistoryArchive (TransactionType, ReferenceOrderID, TransactionDate) INCLUDE (ProductID, Quantity, ActualCost)

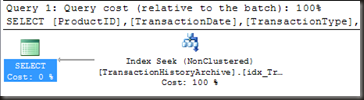

Success, the sort has gone!

One last example, then we’ll take a brief look at group by before finishing up for the day.

SELECT ProductID , TransactionDate , TransactionType , Quantity , ActualCost FROM Production.TransactionHistoryArchive WHERE ActualCost > 2500 ORDER BY TransactionDate

Can I use the same technique here to remove the need for a sort?

Let’s look at that simplistic index that I described earlier and see how the inequality would play out.

Col1 Col2 A 1 A 3 A 8 B 4 B 8 B 9 B 14 C 0 C 1

If I filter that for Col1 > ‘A’, are the resultant rows ordered by Col2?

No, because the filter on Col1 is an inequality, a filter that returns multiple different values of Col1, the results aren’t ordered by the second column and hence we can’t use an index here to both support the filter and the order.

Given this one, we could create an index to support the filter and have SQL sort the rows that qualified or we could create an index to support the order by and have SQL scan that and filter out rows that don’t match.

In general, the first option is the one that will be best in the majority of cases. The primary use of an index is to locate rows and filter resultsets. There are cases where the alternative may be appropriate, if the vast majority of the rows in the table qualify for the filter then it may be more optimal to support the sort and let SQL scan and filter. Definitely not the usual case though.

That about wraps up sorts, now for a quick look at group by.

As mentioned earlier, SQL has two ways to process grouping, it can do what is called a Stream Aggregate or it can use a hash table and do a Hash Aggregate. Stream Aggregate requires that the resultset be sorted in the order of the grouping columns. Well, given that fact and that we’ve spend a lot of time showing how to use indexes to support a sort, this should be quick and easy.

Let’s dive straight into some examples, because the theory is the same as for the sort described earlier

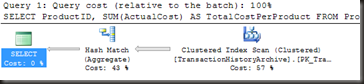

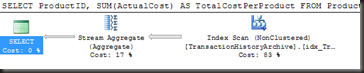

SELECT ProductID, SUM(ActualCost) AS TotalCostPerProduct FROM Production.TransactionHistoryArchive GROUP BY ProductID

This currently executes as a hash aggregate.

Table ‘Worktable’. Scan count 0, logical reads 0, physical reads 0, read-ahead reads 0.

Table ‘TransactionHistoryArchive’. Scan count 1, logical reads 1419, physical reads 0.

SQL Server Execution Times:

CPU time = 47 ms, elapsed time = 46 ms.

If we want a stream aggregate, then we need to get the rows entering the aggregation ordered by ProductID. SQL is not going to willingly sort the entire resultset (the hash aggregate is cheaper), so the only way we’re going to get a stream aggregate (other than with a hint) is by adding an index so that the data can be read from the index already ordered. There’s no filter here so it’s a straightforward index

CREATE NONCLUSTERED INDEX idx_TransactionHistoryArchive_ProductID ON Production.TransactionHistoryArchive (ProductID) INCLUDE (ActualCost)

Table ‘TransactionHistoryArchive’. Scan count 1, logical reads 482, physical reads 0.

SQL Server Execution Times:

CPU time = 31 ms, elapsed time = 34 ms.

Not a massive improvement, but the principle is there.

Now, for homework, take these two queries and see firstly if it is possible to create an index to support both the filter and the group by and, if so, identify what that index is.

Question 1

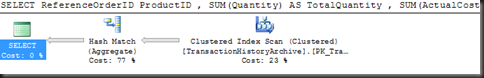

SELECT ReferenceOrderID ProductID , SUM(Quantity) AS TotalQuantity , SUM(ActualCost) AS TotalCost FROM Production.TransactionHistoryArchive WHERE TransactionType = 'S' GROUP BY ReferenceOrderID, ProductID

Question 2

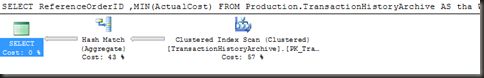

SELECT ReferenceOrderID ,MIN(ActualCost)| FROM Production.TransactionHistoryArchive AS tha WHERE TransactionDate > '2004-01-01' GROUP BY ReferenceOrderID

Edit: And (as a late clarification) assume that the filter on transaction date is not always the same date.

Enough for today. Same time, same place Wednesday for a look at indexes on part of a table.

Are there suppose to be INCLUDE columns in the first 2 indexes? I am not getting a change in the execution plan after running the queries. If I add the additional columns in the query as INCLUDE columns, then it works like yours.

Thanks,

Thomas

Which ones? All the indexes have include columns specified.

Gail,

I am guessing my browser (IE 8), does not display the whole CREATE INDEX text, because when I highlight the 3rd create index and copy/paste, the include part shows up. I think it is a display problem with the blog.

Thanks,

Thomas

By the way, I am looking foward to the whole week of posts from you. This is good stuff.

Thanks,

Thomas

Javascript off perhaps? I tested on IE8 and it’s displaying fine here. https://www.sqlinthewild.co.za/?attachment_id=1337

Thanks a for a great post Gail. You really make this simpler to understand. Here are my indexes

Query 1

CREATE NONCLUSTERED INDEX idx_TransactionHistoryArchive_1

ON Production.TransactionHistoryArchive (TransactionType, ReferenceOrderID, ProductID)

INCLUDE (Quantity, ActualCost)

Query 2

CREATE NONCLUSTERED INDEX idx_TransactionHistoryArchive_2

ON Production.TransactionHistoryArchive (ReferenceOrderID, TransactionDate)

INCLUDE (ActualCost)

WHERE TransactionDate > ‘2004-01-01’

The order of columns in the index definitely makes a difference. Looking forward for more such posts.

Thanks

Interesting 2nd answer. Wasn’t quite what I intended, but that’s my fault for not being clearer.

I just focused on the problem at hand. In real life, this is not something I would create because the TransactionDate could be greater than any date. But, I wasn’t able to get rid of the SORT operator any other way. Would love to see alternative solutions.

The intention was that the TransactionDate would vary, and I think you missed half of the question. It was ‘Can you create an index and, if so, what would it be?’

Thanks for clarifying. Sorry I should have paid attention myself.

Here’s the new index

CREATE NONCLUSTERED INDEX idx_TransactionHistoryArchive_2

ON Production.TransactionHistoryArchive (ReferenceOrderID, TransactionDate)

INCLUDE (ActualCost)

no sort, index scan and stream aggregate

The Gail, the big thank you.

Pingback: Various DBA Notes « 36 Chambers – The Legendary Journeys: Execution to the max!

I’m a newbie to indexes and I’m confused…

You’ve mentioned: “The leaf level of an index is logically ordered by the index key (not physically ordered, let’s stop that myth right here please).”

In the Clustering Index Debate lecture linked here, Kimberly says data is ‘physically’ stored in the order of the index key…

So what i gather is: A clustered index is stored physically and a non-clustered logically. is it?

Nope. Logically only for both.

SQL will try to put a new index down in physical order (clustered and nonclustered alike), but there’s no guarantee it will stay that way. If the clustered index was always stored in physical order of the index key, a clustered index would always have 0% logical fragmentation, as logical fragmentation is the measure of how badly the logical and physical orders differ.

Since I’m sure you agree that clustered indexes can indeed have a non-zero logical fragmentation, it thereby follows that the clustered index is not always stored in physical order.

Right got it thanks. 🙂