In a similar vein to last week’s blog post… I heard an interesting comment recently. “Change that Column != 2 to a Column > 2 or Column < 2 combination, it can use indexes better.”

Sounds like something that clearly needs testing!

I’ll start with simple numbers table.

CREATE TABLE dbo.Numbers ( Number INT NOT NULL ); ALTER TABLE Numbers ADD CONSTRAINT PK_Numbers PRIMARY KEY CLUSTERED (Number);

and put 1 million rows into it

INSERT INTO dbo.Numbers (Number) SELECT TOP (1000000) ROW_NUMBER() OVER (ORDER BY (SELECT 1)) FROM msdb.sys.columns c CROSS JOIN msdb.sys.columns c2;

Let’s start, before we get into comparing things, with looking at the execution plan of a query with a != (or <>) operator.

SELECT Number FROM Numbers WHERE Number <> 12742; -- because 2 is on the first page of the index, and I don’t want any special cases here

That’s kinda complicated for a query with one table and one predicate. Let’s look at in pieces. The easiest place to start is the Clustered Index Seek. The seek predicate on the clustered index seek is

Seek Keys[1]: Start: [Test].[dbo].[Numbers].Number > Scalar Operator([Expr1009]), End: [Test].[dbo].[Numbers].Number < Scalar Operator([Expr1010])

Hmm…Looks like the parser/optimiser has already made our intended change for us. There’s some funky stuff in the top part of the plan, but what it’s essentially doing is generating two rows for the nested loop join, both with just the value that we’re excluding from the query, then the seek runs twice. I suspect that’s once for the less than 12742 and once for the greater than 12742 portions of the original predicate.

But, let’s do the full due diligence, the plan may not tell the whole story.

The performance numbers for the inequality form of the query, gathered via Extended Events and aggregated with Excel are:

Duration 122ms

CPU 105ms

Logical reads: 1619

This is our baseline, the numbers we’re comparing against. If the comment mentioned at the beginning is correct, then the revised query will have a significantly better performance.

The revised query is:

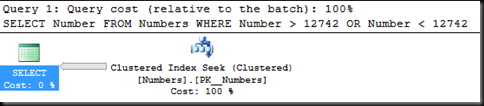

SELECT Number FROM Numbers WHERE Number > 12742 OR Number < 12742;

Execution plan is much simpler, no constant scans, no joins. Just a single index seek operation that executes once.

Is is better though?

Duration: 126ms

CPU: 103ms

Logical reads: 1619

No, it’s not.

Yes, we have a simpler plan, but we do not have a more efficient query. We’re still reading every page in the index and fetching all but one row of the table. The work required is the same, the performance characteristics are the same.

But, maybe, if the numbers aren’t unique and we’re excluding more than just one row it’ll be different.

That needs a slightly different table to test on.

CREATE TABLE MoreNumbers ( SomeNumber INT NOT NULL, Filler CHAR(100) ); CREATE CLUSTERED INDEX idx_MoreNumbers ON dbo.MoreNumbers(SomeNumber); GO INSERT INTO dbo.MoreNumbers (SomeNumber, Filler) SELECT TOP (500000) NTILE(50) OVER (ORDER BY (SELECT 1)), '' FROM msdb.sys.columns c CROSS JOIN msdb.sys.columns c2;

I’m just going to look at the performance characteristics this time. The execution plans are the same as for the earlier query. The two queries are:

SELECT * FROM dbo.MoreNumbers WHERE SomeNumber != 24; SELECT * FROM dbo.MoreNumbers WHERE SomeNumber < 24 OR SomeNumber > 24;

Query 1:

Duration 97ms

CPU 77ms

Logical Reads 7624

Query 2:

Duration 98ms

CPU 75ms

Logical Reads 7624

Again identical.

Just like with the pointless WHERE clause predicate last week, we have a query change that has had no effect on the query performance. Now, to be honest, there are some query form changes that can improve performance. For example, converting a set of OR predicates to UNION can improve query performance sometimes (and leave it unchanged in others), and so these kinds of rewrites do need to be tested to see if they’re useful.

More importantly though, those of us who are posting on forums and advising others have a responsibility to do these tests before we recommend changes to others, as they may very well not do them. If we don’t, we’re propagating myths and not helping the advancement of our field.

🙂 Love it Gail!

Hi Gail,

I started reading your blog a few months ago, and wanted to let you know how awesome I think it is. I really love the way you write as well as the amount of detail you provide.

Thanks,

R

Another great post, Gail. I’ve been a fan of yours since I saw you speak at PASS in 2009. Thanks again!

Really appreciate your message here. Even if there was a small inefficiency using != (which you showed there is not), IMO the simpler SQL statement is still worth it, as it is so much more clear. The problems we solve provide us with ample complexity; we don’t need to add to it.

Thanks!

Another great post from a SQL Deity.

Your acknowledgment of the OR to UNION scenario just goes to show the “it depends” answer is almost always in play.

Wish I could post more comments that might help in SQL forums but my ADHD kicks in before I get to testing and SQUIRREL!

Kris

well written. I worked through the examples as I thought originally you were indicating that a > AND < would operate the same, which of course isn't the case. Nice example of a walk through myth buster 🙂 subscribed on feedly!

Hi Gail,

Almost 3 years later and I stumbled upon this post. Recently we actually had an interesting situation at my workplace that touched on this topic. We had a developer using LING in conjunction with Entity Framework to query a table with a filter on an indexed bit field.

The query went something like: `.Where(p => p.Active != false)`. This was translated verbatim into SQL and the query ran for 3 seconds to produce a result set of approx. 2k rows. When we changed the `!= false` to `== true` the query suddenly returned in under 200 ms. No adverse index fragmentation or out of date statistics.

Do you have any inclination as to what may / may not be the cause of this?

Without the query or query plan, no. Not enough information to even guess