A common problem when looking at execution plans is attributing too much meaning and value to the costs of operators.

The percentages shown for operators in query plans are based on costs generated by the query optimiser. They are not times, they are not CPU usage, they are not IO. The costs that the query optimiser generates are unit-less numbers that it uses internally to estimate the relative expense of plans as it optimises a query.

For starters, the percentages of operators should add to 100 across the entire plan, so worrying that the only data access operator in a simple query plan shows 100% is pointless. If there’s only one index seek/scan in the plan, of course it’s going to be close to 100% of the total cost of the plan; there’s nowhere else for the cost to go.
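To make the arithmetic concrete, here is a minimal sketch of how a percentage falls out of the estimated costs: each operator's estimated cost divided by the total for the plan. The function name and the cost values are made up for illustration; they are not taken from a real plan.

```python
def cost_percentages(estimated_costs):
    """Express each operator's estimated cost as a share of the plan total."""
    total = sum(estimated_costs.values())
    return {op: cost / total * 100.0 for op, cost in estimated_costs.items()}

# Hypothetical unit-less costs, invented for this example.
single_operator_plan = {"Index Seek": 0.0033}
hypothetical_plan = {"Index Seek": 0.0033, "Compute Scalar": 0.0000001}

print(cost_percentages(single_operator_plan))  # {'Index Seek': 100.0}
print(cost_percentages(hypothetical_plan))     # the seek still takes almost everything
```

Whatever the absolute costs are, the shares are forced to add to 100, so a lone data access operator has to show close to 100%.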

But that’s not all. The accuracy of these estimates is based, in part, on the accuracy of the row estimations. If the statistics are out of date or there are any other row estimation errors, then the costs and, as a result, the percentages shown will be wildly incorrect.

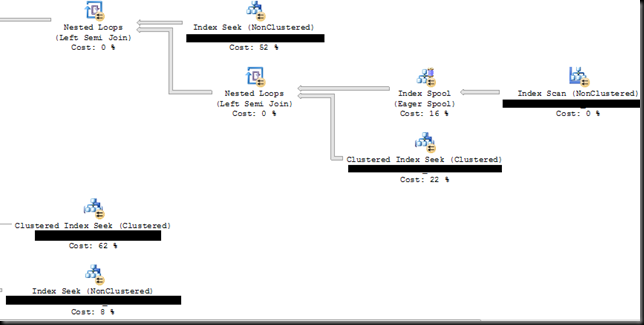

Take, for example, this query plan.

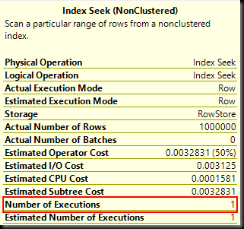

According to the percentages, the two operators were of equal cost. But a key lookup is just a single-row clustered index seek by a different name, and if we look at the number of executions of the two operators, it’s clear that they cannot possibly have been the same cost to execute.

An index seek to return 1 million rows and a million index seeks to fetch one row each are not going to take the same amount of resources to execute, and hence those percentages are completely misleading.
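A rough sketch of why a bad row estimate skews the split so badly. The cost model below is invented purely for illustration and is not the optimiser's real costing; the row counts (1 estimated, 1 million actual) are also assumptions chosen to mirror the example above.

```python
def toy_costs(rows):
    """Invented toy cost model, NOT SQL Server's real costing formulas."""
    return {
        "Index Seek": 0.003 + 0.000001 * rows,  # one seek, then read `rows` rows
        "Key Lookup": 0.003 * rows,             # one single-row seek per row
    }

def percentages(costs):
    """Each operator's share of the total cost, rounded for display."""
    total = sum(costs.values())
    return {op: round(cost / total * 100.0, 2) for op, cost in costs.items()}

# Percentages as they would be shown if the optimiser estimated 1 row...
estimated = percentages(toy_costs(1))
# ...versus the split if the costs were recalculated for 1 million rows.
actual = percentages(toy_costs(1_000_000))

print("estimated:", estimated)
print("actual:   ", actual)
```

With a one-row estimate the two operators look roughly equal; with a million rows the lookups dwarf the seek. The displayed percentages only ever reflect the first case.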

Because the percentages can easily be way out, focusing on them when performance tuning is potentially going to result in a lot of wasted time. There is no single value, counter, measure or result that’s going to indicate, by itself, the cause of performance problems. Obsessing over single data points, or focusing on changing a single data point, is almost certainly going to waste time.

Oh, and if anyone still wants to attribute importance to the percentages…