Keynote opened with announcements regarding the PASS board. There was a very emotional speech from Wayne Snyder as the board said goodbye to Kevin Kline, as he steps down from the PASS board after 10 years.

I first met Kevin when I attended the European conference in 2005 (in Munich). I didn’t know anyone and I didn’t know very much. Kevin was at the opening party and he was talking to everyone there, making sure that they felt welcome and that they were engaged in the conversation. There was no feeling that he was faking interest, he was genuinely interested in what people were doing with SQL.

The changes to the board are, as well as the new board members, Wayne Snyder becomes "Immediate past president" and Rushabh Metah takes the role of President.

After the board announcement we get the keynote ‘tax’, long, boring discussion by a Del person. Configuration management, consolidation. Some waffle about disaster recovery, but without any meat. Or maybe consolidation. Haven’t quite worked it out. If this was a session, he’d be talking to an empty room.

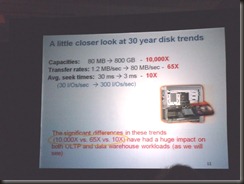

David DeWitt’s presentation is looking at the historical and future trends of database platform changes. The improvement in CPU power way outstrips the improvement in disk speed. Hopefully SSDs will fix that.

The disk trends that he’s discussing are scary. Relatively speaking (considering the size of the disks today), drives are much slower than they were 20 or so years ago. Sequential reads are faster than random by a greater factor than some years back. Random reads hurt.

CPUs are faster, waay faster than they were years ago. Accessing memory takes more cycles than historically, like 30x more.

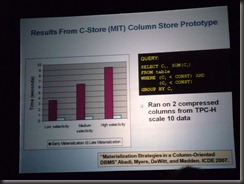

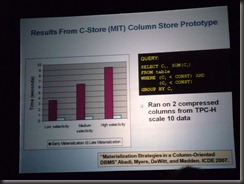

The way databases are currently designed, they incur lots of L1 and L2 cache misses. L2 cache miss can stall the CPU entirely for 200 cycles. This is why it’s so hard to max out modern processors. They spend so much of their time waiting. What makes it worse is that the cache lines are only 64 bytes wide. If a row is more than 64 bytes wide, moving a row from one cache to another will require more than one cycle and potentially more than one cache-miss. Changing database architecture to a column-store may alleviate this.

Compression is a CPU/disk tradeoff and that’s fine. CPUs are 1000x faster now and disk only 65x faster. Hence use some of those CPU cycles to help the poor disks along.

Some very interesting discussions on compression with column-stores. Store the data multiple times in different orders. David: "After all, we need to do something with those terabyte drives"

Run-length encoding works so well with a column store, especially if the columns are stored ‘in order’. Dictionary compression is good if the columns are not in order.

Hybrid model is also an option. Some of the benefits of the two, some downsides of the two. Lot of academic papers on those options. Search Google Scholar if interested

Some photos from David’s presentation