Resources from March usergroup meeting

I’ve uploaded some of the material from the last usergroup meeting.

Have fun.

A discussion on SQL Server

I’ve uploaded some of the material from the last usergroup meeting.

Have fun.

13 March 2018: There is an updated post on this subject – https://www.sqlinthewild.co.za/index.php/2018/03/13/revisiting-catch-all-queries/

The query form that I refer to as ‘Catch-all’ typically results from search screens in the application where the user may enter any one (or more) of a number of optional parameters. One of the more common ways for such a query to be written in SQL is with multiple predicates in the where clause of the form (WHERE SomeColumn = @SomeVariable OR @SomeVariable IN NULL)

Now this does work, the problem is that it works fairly inefficiently and, on large tables, can result in really poor query performance. I’m going to take a look at why that is the case and what alternatives there are.

Erland Sommarskog has written on this as well, and in a lot more detail than I’m going to. His article on dynamic search conditions is well worth reading, as are the rest of his articles.

A typical example of a ‘catch-all’ query would be this one, based off a table in the AdventureWorks database.

01 02 03 04 05 06 07 08 09 10 | CREATE PROCEDURE SearchHistory(@Product int = NULL, @OrderID int = NULL, @TransactionType char(1) = NULL, @Qty int = NULL)ASSELECT ProductID, ReferenceOrderID, TransactionType, Quantity,TransactionDate, ActualCost from Production.TransactionHistoryWHERE (ProductID = @Product Or @Product IS NULL)AND (ReferenceOrderID = @OrderID OR @OrderID Is NULL)AND (TransactionType = @TransactionType OR @TransactionType Is NULL)AND (Quantity = @Qty Or @Qty is null)GO |

Now, let’s say that I run that query and pass values for the ProductID and the Transaction type. Let’s further say that there’s a nonclustered index (called idx_TranHistory_TranTypeProductID) on those two columns.

1 | EXEC SearchHistory @Product = 978, @TransactionType = 'W' |

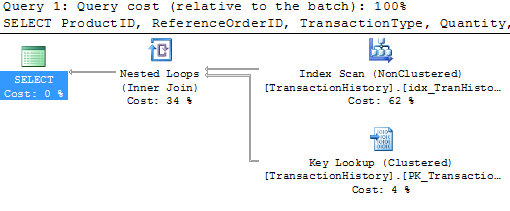

Now this returns 52 rows out of 980000 that are in the table, so we’d expect that SQL would use an index seek operation on that index, followed by a bookmark lookup.

Nope. It’s using that index all right, but it’s doing a scan, not a seek. Ok, not great, but not bad. Let me try a different set of parameters

1 | EXEC SearchHistory @Qty = 100 |

The plan’s exactly the same. No surprise, it was cached the first time and then reused. There’s a problem here though, the index that’s used is completely inappropriate and there’s a bookmark lookup that ran almost a million times. No wonder this execution took 3 seconds and 2,949,715 IOs to return 29 rows.

Ok, so let me try a different form of the catch-all query

1 2 3 4 5 6 7 8 9 | CREATE PROCEDURE SearchHistory_Improved(@Product int = NULL, @OrderID int = NULL, @TransactionType char(1) = NULL, @Qty int = NULL)ASSELECT ProductID, ReferenceOrderID, TransactionType, Quantity, TransactionDate, ActualCost from Production.TransactionHistoryWHERE (ProductID = CASE WHEN @Product IS NULL THEN ProductID ELSE @Product END)AND (ReferenceOrderID = CASE WHEN @OrderID IS NULL THEN ReferenceOrderID ELSE @OrderID END)AND (TransactionType = CASE WHEN @TransactionType IS NULL THEN TransactionType ELSE @TransactionType END)AND (Quantity = CASE WHEN @Qty IS NULL THEN Quantity ELSE @Qty END)GO |

Let’s see what that does for the first test:

1 | EXEC SearchHistory_Improved @Product = 978, @TransactionType = 'W' |

Well that’s no better. Full blown table scan.

The problem with these types of queries is that there is no stable plan. The optimal plan differs completely depending on what paramters are passed. The optimiser can tell that and it plays safe. It creates plans that will always work. That’s (one of the reasons) why in the first example it was an index scan, not an index seek.

The downside of the safe plan is that it’s highly unlikely to be a good plan and, even if it is, it won’t be good for all possible combinations of plans.

So, how to handle this type of query? Well, there are typically two ways.

Recompile

This is only an option on SQL 2008. On 2008, if the query is specified with the OPTION (RECOMPILE) hint, then the optimiser knows it doesn’t have to worry about safe plans because the plan will never be reused. In fact, if I add that hint to the query in the first example, I get the expected index seek.

Dynamic SQL

The other option is to build up the query string dynamically, based on the parameters passed and then to use sp_executesql to run it. There are the ususal downsides to dynamic SQL but, it may be that the performance improvement is worth it.

01 02 03 04 05 06 07 08 09 10 11 12 13 14 15 16 17 18 19 20 21 22 23 | CREATE PROCEDURE SearchHistory_Dynamic (@Product int = NULL, @OrderID int = NULL, @TransactionType char(1) = NULL, @Qty int = NULL)ASDECLARE @sSQL NVARCHAR(2000), @Where NVARCHAR(1000) = ''SET @sSQL = 'SELECT ProductID, ReferenceOrderID, TransactionType, Quantity, TransactionDate, ActualCostfrom Production.TransactionHistory 'IF @Product is not nullSET @Where = @Where + 'AND ProductID = @_Product 'IF @OrderID is not nullSET @Where = @Where + 'AND ReferenceOrderID = @_OrderID 'IF @TransactionType IS NOT NULLSET @Where = @Where + 'AND TransactionType = @_TransactionType 'IF @Qty IS NOT NULLSET @Where = @Where + 'AND Quantity = @_Qty 'IF LEN(@Where) > 0SET @sSQL = @sSQL + 'WHERE ' + RIGHT(@Where, LEN(@Where)-3)EXEC sp_executesql @sSQL,N'@_Product int, @_OrderID int, @_TransactionType char(1), @_Qty int',@_Product = @Product, @_OrderID = @OrderID, @_TransactionType = @TransactionType, @_Qty = @QtyGO |

Note that there’s no SQL injection vulnerability in this. The parameters are never concatenated into the string and the execution is parametrised.

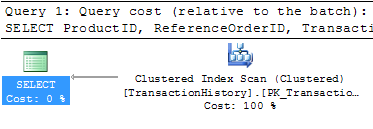

Now each different set of parameters gets a different cached plan, optimal for that particular set of parameters.

1 2 3 | EXEC SearchHistory_Dynamic @Product = 978, @TransactionType = 'W'EXEC SearchHistory_Dynamic @Qty = 100 |

The first gets an index seek, the second a clustered index scan (because there’s no index on Quantity). Much better than the behaviour with the earlier non-dynamic versions.

The next SQL Server user group will be on the 17 Mar 2009 6:30PM at the Microsoft Offices in Bryanston (3012 William Nicol Drive) Auditorium 1

We will have 2 session, one on Powershell and one on Auditing in SQL Server.

Paul Filmalter will be doing the Powershell session and Paul Els the Auditing on SQL Server.

So come and join us and have some fun.

Hope to see you all soon and please help us build the community by encouraging at least one friend or colleague to join us.

—

Paul

Most people who know SQL execution plans will say, without reservation, that an index seek on a particular index is better than an index scan on the same index. In the vast majority of cases, that’s true, but there are times when what appears in the execution plan as an index seek is actually an index scan.

Let me show an example

1 2 3 4 5 6 7 8 9 | CREATE TABLE TestingSeeks (id int identity (1,1) not null,SomeStr char(6) default '' -- a filler)GOinsert into TestingSeeks (SomeStr)select top (500000) ''from sys.columns c1 cross join sys.columns c2 |

We have a table here with an identity column on it, starting at 1 and incrementing by 1 row. Hence, there will be no negative values in the table. I’m going to then put a nonclustered index on that column (the table has no cluster, it’s a heap)

1 | CREATE NONCLUSTERED INDEX idx_Seek ON TestingSeeks (id) |

Fair enough. If I query all the rows in the table and retrieve just the ID column, I’ll get a scan on that index, as is pretty much expected and Statistics IO tells me that 935 pages were read

The first public CTP of 2008 SP1 is now available – http://blogs.msdn.com/sqlreleaseservices/archive/2009/02/23/sql-server-2008-service-pack-1-sp1-ctp.aspx

Grant (again) tagged me on another of these blog memes, this time started by Mike Walsh. What do I know now that I didn’t know when I started out. I’m very late on answering it, I blame that on a business trip, a pile of work I still haven’t found the bottom of, and a complete lack of enthusiasm to do anything constructive.

1) “I don’t know” is not a sign of weakness

When I started in IT, I knew everything (at least I thought I did). Furthermore I thought I had to know everything. That lead to a number of problems, from ignoring offers for help, to tackling things beyond my abilities, right up to embarrassing myself in front of management after it became apparent I didn’t really know what I was talking about.

2) Beware of self-proclaimed experts

Early on I worked with a database guru. At least, he said he was. Looking back now, older and (perhaps) wiser, many of his ‘best practices’ were nothing of the sort.

Anyone can go around claiming to be an expert. The thing is to see what other people say about them

3) Setbacks are not disasters

I was retrenched from the first company I worked for when they hit financial difficulties. I spent 6 months struggling to make ends meet. At the time I thought it was the worst thing possible. However it lead to a job that changed my focus to databases, leading to where I am now.

It also taught me what some of my major weaknesses are – administration (finding work, chasing payments, etc)

4) Usergroups are not a waste of time

I thought that when I started out (see point 1). Now I run one. Go figure

I think just about everyone’s answered this already, so no tags.

Registrations are now open for South African DevDays 2009.

Durban – 10th March

Cape Town – 17th March

Johannesburg – 26th March

For more info and to register – http://www.microsoft.com/southafrica/devdays/default.mspx

I’ve had several requests for the presentation and demos from my presentation at PASS USA last year , so here they are. This is the presentation that I also did at the January SQL usergroup meeting and at the February meeting of SADeveloper in Durban

The demos are for SQL 2008 and use the SQL 2008 AdventureWorks database (downloadable from Codeplex), but they should work without too many problems on SQL 2005 against the 2005 version of AdventureWorks

Presentation – Dirty Dozen

Demos – https://www.sqlinthewild.co.za/PASS08/Demos.zip

The setup script adds a couple indexes, a column and a fair bit of data, so take a backup of Adventureworks before running that if you want to be able to get back to the original version.

After an interesting discussion on SQLServerCentral last week, I realised that the terms ‘estimated execution plan’ and ‘actual execution plan’ are perhaps a little bit misleading.

The only thing estimated about the estimated execution plan is the rowcounts, costs and row size. The plan itself isn’t an estimate. It’s not as if the optimiser, when asked for an estimated plan, does a less thorough job than when asked to compile a plan for a query’s execution.

The two forms of execution plan are better described as ‘execution plan with run-time information’ and ‘execution plan without run-time information’

When, in Management Studio, someone clicks the ‘display estimated execution plan’ button, the query is submitted to SQL Server, parsed and bound, algebratised and optimised just as if it was going to be executed. But the query is not executed, and as such, the plan when returned contains no run time information.

If there is a matching cached query plan, that cached plan is what’s returned and no optimisation is done. This can be seen by using profiler with the Cache hit, cache miss and cache insert events being traced.

When, in Management Studio, the query is run with the execution plan option enabled, the query is submitted to SQL Server, parsed and bound, algebratised, optimised and executed. The returned plan does contain the run-time for that specific execution, hence the plan contains things like ‘actual row count, actual IO cost’, etc

If there’s a matching query plan in cache then that cached plan will be used for the query’s execution and will be the one returned, though with the run-time information added

When a plan is cached, only the compile-time information is cached. The detailed run-time information on the actual number of rows and actual executions is discarded after updating the aggregated query stats. Hence, when you retrieve a query from the plan cache, it will not contain the run-time information. Consider a plan that’s been used 20 times. Which execution’s run-time information would it contain? Remember that there’s only one plan in cache per procedure.

Hence, a plan fetched from cache will be identical to the plan returned by requesting the estimated execution plan for a specific query (Providing there’s nothing happening to invalidate the cached plan)

Profiler can capture (depending on event) the plan without the run-time information or the plan with the run-time information. There’s a nice table in chapter 2 of one of Itzik’s books that shows the various profiler events, when they fire and what they return.

If not go listen to this podcast – http://runasradio.com/default.aspx?showNum=81. If you think you know perfmon well, go listen to it anyway.

There’s also a video and an article linked in the show’s notes which are referred to several times in the podcast.

Top things I learned from that: