The most optimal join type

What’s the best join type for a query? Should we aspire to seeing nested loop joins in all our queries? Should we tremble with horror at the sight of a hash join?

Well, it depends. 🙂

There’s no single join type that’s best in every scenario and there’s no join type that’s bad to have in every scenario. If one of the join types, say the much-maligned hash join, were sub-optimal in every single scenario, then there would be no reason for it to be in the product and no reason for the optimiser to ever select it for a plan. Since there are three join types, and the optimiser can and does use all three, we must assume that they are all useful under some circumstances.

I took a look at the joins a while back, but it’s worth revisiting.

The nested loop join

A nested loop join is an optimal join type when two conditions are true.

- One of the resultsets contains quite a small number of rows.

- The other table has an index on the join column(s).

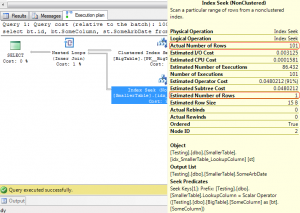

When both of these are true, SQL can do a very efficient nested loop. The smaller resultset becomes the outer table of the join, a loop runs across all the rows in that resultset, and index seeks are done to look up the matching rows in the inner table. It’s important to note that there will be at least one seek against the inner table for every row in the outer table at the point the join occurs.
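To make the loop-and-seek pattern concrete, here is a minimal Python sketch. It is only an illustration, not how the query engine implements the operator: a dictionary stands in for the index on the inner table, and the function names, table names and sample rows are all invented for the example.

```python
from collections import defaultdict


def build_index(rows, key):
    """Stand-in for an index on the join column: key value -> matching rows."""
    index = defaultdict(list)
    for row in rows:
        index[row[key]].append(row)
    return index


def nested_loop_join(outer_rows, inner_index, key):
    """Loop over the outer rows, doing one 'seek' into the inner index per row."""
    seeks = 0
    results = []
    for outer in outer_rows:
        seeks += 1  # one seek per outer row, whether or not it finds a match
        for inner in inner_index.get(outer[key], []):
            results.append((outer, inner))
    return results, seeks


# Hypothetical data: a small outer resultset and a larger inner table.
customers = [
    {"customer_id": 1, "name": "A"},
    {"customer_id": 2, "name": "B"},
    {"customer_id": 3, "name": "C"},
]
orders = [
    {"order_id": 10, "customer_id": 1},
    {"order_id": 11, "customer_id": 1},
    {"order_id": 12, "customer_id": 3},
    {"order_id": 13, "customer_id": 4},
]

order_index = build_index(orders, "customer_id")
joined, seeks = nested_loop_join(customers, order_index, "customer_id")
print(len(joined), seeks)
```

With three rows in the outer resultset, the sketch performs three seeks, including the one for the customer with no orders. The seek count follows the outer row count, not the number of matches.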

If one resultset has a small number of rows but there is no index on the other table on the join column, then a loop join can still be done, but it is less optimal, as the entire inner table (or a subset based on another filter condition) must be read on each iteration of the loop.
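The cost of the missing index can be sketched the same way. Again this is a simplified illustration with made-up names and data, assuming no other filter condition narrows the inner table: without an index to seek on, every iteration of the outer loop has to read the whole inner table.

```python
def nested_loop_join_no_index(outer_rows, inner_rows, key):
    """Nested loop with no index: scan the entire inner table per outer row."""
    rows_read = 0
    results = []
    for outer in outer_rows:
        for inner in inner_rows:  # full scan of the inner table, every time
            rows_read += 1
            if inner[key] == outer[key]:
                results.append((outer, inner))
    return results, rows_read


# Hypothetical data: 2 outer rows, 1000 inner rows.
outer_rows = [{"id": 1}, {"id": 2}]
inner_rows = [{"id": i % 5} for i in range(1000)]

results, rows_read = nested_loop_join_no_index(outer_rows, inner_rows, "id")
print(len(results), rows_read)
```

Here two outer rows cause 2,000 inner rows to be read (2 × 1,000). The work is the outer row count multiplied by the inner table size, which is why this form of the join only stays cheap while the outer resultset is very small.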

If both resultsets have large numbers of rows but there is an index on the join columns in one of the tables, then the nested loop can still read through one of the resultsets and do index seeks to locate matching rows, but the number of rows in the outer table will mean lots and lots of seek operations, which may result in a sub-optimal plan.