I missed the Pass summit last November for a number of reasons, but I’m hoping I can attend this year. Given that, I submitted a number of abstracts for consideration.

The limits on number were as follows: Up to 4 abstracts for regular, spotlight or half-day sessions (ie main conference sessions) plus, providing some requirements are met, up to 2 abstracts for pre-con sessions.

I submitted five abstracts, four were ones I’ve been thinking about for some time, the last was a last minute surprise.

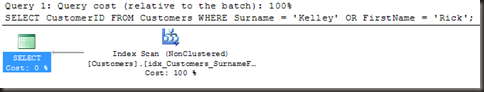

Bad plan! Sit!

Bad execution plans are the bane of database performance everywhere they crop up. But what is a bad execution plan? How do you identify one in your system and, once identified how do you go about fixing it?

In this top-rated session from the 24 Hours of PASS we’ll look at some things that make a plan ‘bad’, how you might detect such plans and various methods of fixing the problem, both immediately and long-term.

I wasn’t planning on submitting this one to be honest. It’s one I did for the 24 hours of Pass back in February, and I didn’t think it appropriate to redo at the Summit the same year. Some people at Pass disagreed with me on that. The session was one of the top 3 from 24 hours of Pass and as such got a guaranteed slot at Pass summit, and a chance for a Spotlight slot.

Is that a parameter I smell?

All too often a forum post on erratic query performance is met with a reply ‘Oh, it’s parameter sniffing. You can fix it with .’ The problem with that answer, even if it has identified the cause, is that it’s only part true. Parameter sniffing is not simply a problem that needs fixing, it’s an essential part of well-performing queries. In most cases.

Come to this session to learn what Parameter Sniffing really is and why it’s a good thing, most of the time. Learn how to identify the scenarios where it’s not good, why a feature that is supposed to improve query performance sometimes degrades it, and what your options are for resolving the problems when they do occur.

Performance improvements in 60 min or less. Guaranteed

The system is slow, users cry

It’s impacting our bottom line

In meetings the curses fly

The situation is far from fine

While up on the IT floor

The DBA tears his hair

He’ll soon be shown the door

If he cannot the performance repair,

It often seems, from looking at forum posts and client requests, that the steady-state of databases is ‘too slow’ and all too often the people who are tasked with resolving performance problems are overwhelmed by the shear scope of the problem, and aren’t really sure where to start.

In this demo-heavy session, we’ll look at a fictional company’s database and website and work through finding the worst offenders in terms of poor performance, identifying the causes of the problems and at least starting to get the queries to perform better and the users to stop phoning and complaining.

Yes, part of the abstract is written in verse. It’s something I was threatening to do since last year, and I decided that it shouldn’t harm my chances, much.

Dos and don’ts of database corruption

Database corruption is one of the worst things you can encounter as a DBA. It can result in downtime, data loss, and unhappy users. What’s scary about corruption is that it can strike out of the blue and with no warning. If maintenance is not being done regularly on the database it’s easy for corruption to go unnoticed until it’s too late to repair without losing data.

In this session we’ll look at

- Easy maintenance operations you should be running right now to ensure the fastest possible identification and resolution of corruption

- Best practices for handling a database that you suspect may be corrupted

- Common actions that can worsen the problem.

- Appropriate steps to take and methods of recovery

I did this session initially for Quest, for their Pain of the Week webcast back in February. I enjoyed doing it a lot, and it got some good feedback, so I decided to submit it for Summit as a contrast to my usual performance-related presentations.

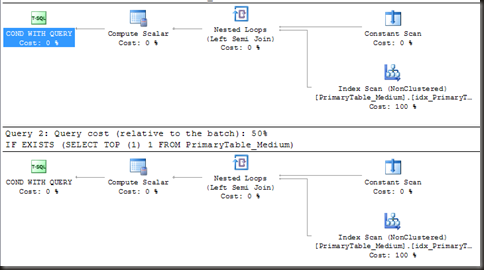

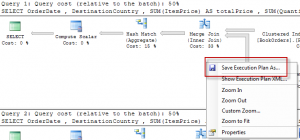

All about Execution Plans

Last, but far from least…

Grant Fritchey (blog | twitter) and I submitted a join pre-con session on execution plans. This is an area Grant is a well-known expert on, having written a book on it, and we’re hoping that we’ll be given the chance to devote a full day to this topic.

The key to understanding how SQL Server is processing your queries is the execution plan.

This full day session focuses on the execution plan. We will start right at the beginning and talk about the compile process. We’ll also go over how, and more importantly, why, plans are stored in cache and how they are removed.

We’ll spend time exploring the key differences between actual and estimated plans, and why those descriptions are more than a little misleading. We’ll also show you assorted methods to obtain a query’s execution plan and what the differences and tradeoffs of each are.

A full day class on execution plans would not be complete without spending time learning to reading them. You’ll learn where to find useful information in execution plans, what the common operators are and how to decipher the sometimes cryptic messages the plans are sending to you. We’ll also debunk some myths surrounding query operators and execution plans.

All of this is meant to further your understanding of how queries work in order to improve the queries you’re responsible for. With this in mind, we’ll show how you can use execution plans to tune queries. All of the information presented will be taken from real world examples. We’ll build on the information through the day so that at the end, after following us through multiple examples at your own computer, you’ll have a stronger understanding of how to read, interpret and actually use execution plans in your day-to-day job.