Recompiles

I’ve looked at cache hit and miss events and at the cache insert and remove events. The only cache-monitoring event (of current interest) left is the recompile event.

There are two recompile events available in Profiler in SQL 2008:

- SP:Recompile under Stored Procedures

- SQL:StmtRecompile under T-SQL
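
For anyone who would rather check this from a query window than from the Profiler event-selection dialog, the trace catalog views should list the same two events with their categories. This is a minimal sketch, not something from the original trace setup; it assumes the `sys.trace_events` and `sys.trace_categories` catalog views (present in SQL 2005 and later) and matches the events by their display names.

```sql
-- Sketch: look up the two recompile events and the category each one
-- is filed under, using the SQL Trace catalog views.
-- Assumption: the event names match the Profiler display names exactly.
SELECT  e.trace_event_id,
        e.name AS event_name,
        c.name AS category_name
FROM    sys.trace_events AS e
        INNER JOIN sys.trace_categories AS c
            ON c.category_id = e.category_id
WHERE   e.name IN (N'SP:Recompile', N'SQL:StmtRecompile')
ORDER BY e.trace_event_id;
```

The `trace_event_id` values returned are the ones a server-side trace would need if the events were scripted rather than picked in the Profiler UI.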

Which to use when?

Books Online has the following to say on the two events.

The SQL:StmtRecompile event class indicates statement-level recompilations caused by all types of batches: stored procedures, triggers, ad hoc batches, and queries. Starting in SQL Server 2005, the SQL:StmtRecompile event class should be used instead of the SP:Recompile event class.

and

The SP:Recompile event class indicates that a stored procedure, trigger, or user-defined function has been recompiled. In SQL Server 2005 and later, recompilations reported by this event class occur at the statement level, whereas those in SQL Server 2000 occurred at the batch level.

In SQL Server 2005 and later, the preferred way to trace statement-level recompilations is to use the SQL:StmtRecompile event class. Starting in SQL Server 2005, the SP:Recompile event class is deprecated.

So it appears that they show the same thing, and that SP:Recompile is deprecated. That simplifies things: the only one I’m going to look at is the SQL:StmtRecompile event.

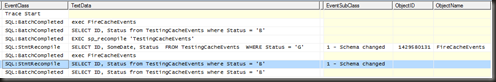

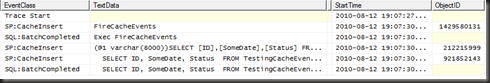

So what does the event look like?