Continuing with the mini-series on query operators, I want to have a look at NOT EXISTS and NOT IN.

Previous parts of this miniseries are:

Just one note before diving into that. The examples I’m using are fairly simplistic and that’s intentional. I’m trying to find what, if any, are the performance differences in a benchmark-style setup. I’ll have some comments on more complex examples in a later post.

The most important thing to note about NOT EXISTS and NOT IN is that, unlike EXISTS and IN, they are not equivalent in all cases. Specifically, when NULLs are involved they will return different results. To be totally specific, when the subquery returns even one null, NOT IN will not match any rows.

The reason for this can be found by looking at the details of what the NOT IN operation actually means.

Let’s say, for illustration purposes that there are 4 rows in the table called t, there’s a column called ID with values 1..4

1 | WHERE SomeValue NOT IN (SELECT AVal FROM t)

|

is equivalent to

1 2 3 4 5 6 7 8 9 | WHERE (

SomeValue != (SELECT AVal FROM t WHERE ID=1)

AND

SomeValue != (SELECT AVal FROM t WHERE ID=2)

AND

SomeValue != (SELECT AVal FROM t WHERE ID=3)

AND

SomeValue != (SELECT AVal FROM t WHERE ID=4)

)

|

Let’s further say that AVal is NULL where ID = 4. Hence that != comparison returns UNKNOWN. The logical truth table for AND states that UNKNOWN and TRUE is UNKNOWN, UNKNOWN and FALSE is FALSE. There is no value that can be AND’d with UNKNOWN to produce the result TRUE

Hence, if any row of that subquery returns NULL, the entire NOT IN operator will evaluate to either FALSE or UNKNOWN and no records will be returned

So what about EXISTS?

Exists cannot return NULL. It’s checking solely for the presence or absence of a row in the subquery and, hence, it can only return true or false. Since it cannot return NULL, there’s no possibility of a single NULL resulting in the entire expression evaluating to UNKNOWN.

Hence, when the column in the subquery that’s used for comparison with the outer table can have nulls in it, consider carefully which of NOT EXISTS or NOT IN you want to use.

Ok, but say there are no nulls in the column. How do they compare speed-wise. I’m going to do two tests, one where the columns involved in the comparison are defined as NULL and one where they are defined as NOT NULL. There will be no NULL values in the columns in either case. In both cases, the join columns will be indexed. After all, we all index our join columns, right?

So, first test, non-nullable columns. First some setup

01 02 03 04 05 06 07 08 09 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 | CREATE TABLE BigTable (

id INT IDENTITY PRIMARY KEY,

SomeColumn char(4) NOT NULL,

Filler CHAR(100)

)

CREATE TABLE SmallerTable (

id INT IDENTITY PRIMARY KEY,

LookupColumn char(4) NOT NULL,

SomeArbDate Datetime default getdate()

)

INSERT INTO BigTable (SomeColumn)

SELECT top 250000

char(65+FLOOR(RAND(a.column_id *5645 + b.object_id)*10)) + char(65+FLOOR(RAND(b.column_id *3784 + b.object_id)*12)) +

char(65+FLOOR(RAND(b.column_id *6841 + a.object_id)*12)) + char(65+FLOOR(RAND(a.column_id *7544 + b.object_id)*8))

from master.sys.columns a cross join master.sys.columns b

INSERT INTO SmallerTable (LookupColumn)

SELECT DISTINCT SomeColumn

FROM BigTable TABLESAMPLE (25 PERCENT)

CREATE INDEX idx_BigTable_SomeColumn

ON BigTable (SomeColumn)

CREATE INDEX idx_SmallerTable_LookupColumn

ON SmallerTable (LookupColumn)

|

Then the queries

1 2 3 4 5 6 7 | SELECT ID, SomeColumn FROM BigTable

WHERE SomeColumn NOT IN (SELECT LookupColumn FROM SmallerTable)

SELECT ID, SomeColumn FROM BigTable

WHERE NOT EXISTS (SELECT LookupColumn FROM SmallerTable WHERE SmallerTable.LookupColumn = BigTable.SomeColumn)

|

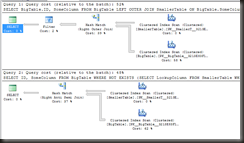

The first thing to note is that the execution plans are identical.

The execution characteristics are also identical.

Query 1

Table ‘BigTable’. Scan count 1, logical reads 342, physical reads 0.

Table ‘SmallerTable’. Scan count 1, logical reads 8, physical reads 0.

SQL Server Execution Times:

CPU time = 156 ms, elapsed time = 221 ms.

Query 2

Table ‘BigTable’. Scan count 1, logical reads 342, physical reads 0.

Table ‘SmallerTable’. Scan count 1, logical reads 8, physical reads 0.

SQL Server Execution Times:

CPU time = 156 ms, elapsed time = 247 ms.

So, at least for the case where the columns are defined as NOT NULL, these two perform the same.

What about the case where the columns are defined as nullable? I’m going to simply alter the two columns involved without changing anything else, then test out the two queries again.

1 2 3 4 5 | ALTER TABLE BigTable

ALTER COLUMN SomeColumn char(4) NULL

ALTER TABLE SmallerTable

ALTER COLUMN LookupColumn char(4) NULL

|

And the same two queries

1 2 3 4 5 6 7 8 | SELECT ID, SomeColumn FROM BigTable

WHERE SomeColumn NOT IN (SELECT LookupColumn FROM SmallerTable)

SELECT ID, SomeColumn FROM BigTable

WHERE NOT EXISTS (SELECT LookupColumn FROM SmallerTable WHERE SmallerTable.LookupColumn = BigTable.SomeColumn)

|

And as for their performance…

Query 1

Table ‘SmallerTable’. Scan count 3, logical reads 500011, physical reads 0.

Table ‘BigTable’. Scan count 1, logical reads 437, physical reads 0.

SQL Server Execution Times:

CPU time = 827 ms, elapsed time = 825 ms.

Query 2

Table ‘BigTable’. Scan count 1, logical reads 437, physical reads 0.

Table ‘SmallerTable’. Scan count 1, logical reads 9, physical reads 0.

SQL Server Execution Times:

CPU time = 156 ms, elapsed time = 228 ms.

Radically different execution plans, radically different performance characteristics. The NOT IN took over 5 times longer to execute and did thousands of times more reads.

Why is that complex execution plan required when there may be nulls in the column? I can’t answer that one, probably only one of the query optimiser developers can, however the results are obvious. When the columns allow nulls but has none, the NOT IN performs significantly worse than NOT EXISTS.

So, take-aways from this?

Most importantly, NOT EXISTS and NOT IN do not have the same behaviour when there are NULLs involved. Chose carefully which you want.

Columns that will never contain NULL values should be defined as NOT NULL so that SQL knows there will never be NULL values in them and so that it doesn’t have to produce complex plans to handle potential nulls.

On non-nullable columns, the behaviour and performance of NOT IN and NOT EXISTS are the same, so use whichever one works better for the specific situation.

One more to go on this: LEFT OUTER JOIN with the IS NULL check vs NOT IN