There is a particularly irritating and persistent belief that indexes (usually it’s the clustered that gets picked on) are always physically ordered within the data file by the key columns. That is, that the data within the database file is always ordered by the key column.

It doesn’t help that official documentation states this ‘fact’.

I’m going to diverge from my usual methodology of first proving (or disproving) a statement and then explaining it in this case.

Do indexes (clustered or non-clustered) define the physical storage order of the rows?

No, absolutely not.

What indexes do is provide a logical ordering, a collection of pointers, that allow the storage engine to retrieve data from an index ordered by the index key, but that’s logical ordering, it specified nothing regarding the physical ordering.

The index structure is such that the page with key values 4, 5 and 6 will appear earlier in the index’s logical ordering than the page with key values 10,11 and 12. Where these pages are in the file is not defined at all. The page with key values 10,11 and 12 could be page 240 in the database file while the page with key values 4, 5 and 6 could be page 655.

On the data pages themselves there’s no guarantee that the row with the key value 4 will appear earlier on the page than the row with the key value of 6. 6 could be the first row on the page and 4 last and that would be just fine.

Let’s prove this. Time for DBCC page and some undocumented commands.

First up, the order of rows on the page. I’m going to create a table in a nice new database (so that there are no other tables around messing things up) and populate it with some data.

01 02 03 04 05 06 07 08 09 10 11 12 13 14 | CREATE TABLE OddandEven (

SomeNumber INT,

Filler CHAR(500) DEFAULT ' '

) ;

GO

CREATE UNIQUE CLUSTERED INDEX idx_SomeNumber ON OddandEven (SomeNumber);

GO

INSERT INTO OddandEven (SomeNumber)

SELECT TOP (50) (ROW_NUMBER() OVER (ORDER BY object_id))*2 - 1 FROM sys.objects;

INSERT INTO OddandEven (SomeNumber)

SELECT TOP (50) (ROW_NUMBER() OVER (ORDER BY object_id))*2 FROM sys.objects;

|

So what I’m doing there is simply inserting 50 odd numbers first and 50 even numbers second

A quick check with DBCC IND shows me that page 89 of this database is a data page for this table. I’m going to use dump style 2 for DBCC Page, because I want a raw binary dump with no interpretation (I’m removing the portions that are just the filler, as that’s just intentionally wasted space)

000000000EB6AC50: 20020000 1000fb01 37332020 20202020 † …..û.73

000000000EB6AE40: 20202020 20202020 20202020 20202002 † .

000000000EB6AE50: 00001000 fb013735 20202020 20202020 †….û.75

000000000EB6AE60: 20202020 20202020 20202020 20202020 †

000000000EB6B040: 20202020 20202020 20202020 20020000 † …

000000000EB6B050: 1000fb01 36342020 20202020 20202020 †..û.64

000000000EB6B060: 20202020 20202020 20202020 20202020 †

000000000EB6B240: 20202020 20202020 20202002 00001000 † …..

000000000EB6B250: fb013636 20202020 20202020 20202020 †û.66

000000000EB6B260: 20202020 20202020 20202020 20202020 †

Hmm… 73, 75, 64, 66. That’s not the correct physical ordering… What happened here is that I inserted the odd values first, they were written to the pages then when I wrote the even numbers the pages had to split (firstly) leaving them probably around 50% full, then the even numbers were added in the empty space. SQL doesn’t reorder the rows on the page (that would be expensive).

What keeps track of the logical ordering, what rows should be read first, second, etc. to get the results back in logical ordering, is the slot array at the end of the page

OFFSET TABLE:

Row - Offset

14 (0xe) - 7236 (0x1c44)

13 (0xd) - 3666 (0xe52)

12 (0xc) - 6726 (0x1a46)

11 (0xb) - 3156 (0xc54)

10 (0xa) - 6216 (0x1848)

9 (0x9) - 2646 (0xa56)

8 (0x8) - 5706 (0x164a)

7 (0x7) - 2136 (0x858)

6 (0x6) - 1626 (0x65a)

5 (0x5) - 5196 (0x144c)

4 (0x4) - 1116 (0x45c)

3 (0x3) - 4686 (0x124e)

2 (0x2) - 606 (0x25e)

1 (0x1) - 4176 (0x1050)

0 (0x0) - 96 (0x60)

That tells me that the row with the lowest key value is found at offset 0x60, the next lowest at offset 0x1050, then 0x25e, etc. The rows are not stored on this page in physical order, the slot array defines the logical order so that anything needing the rows in logical order of the index, can read them off the page that way.

That answers the question about rows on a page. Let’s now look at whether pages are always stored in physical order within the data file.

I’m going to drop the OddandEven table and create a new table with the rows sized so that only a few rows fit onto a page.

01 02 03 04 05 06 07 08 09 10 11 12 13 14 15 16 17 | CREATE TABLE PagePhysicalOrder (

SomeNumber INT,

Filler CHAR(800) DEFAULT ' '

);

CREATE UNIQUE CLUSTERED INDEX idx_TestingPhysicalOrder ON PagePhysicalOrder (SomeNumber)

DECLARE @i INT = 9;

WHILE @i >= 0

BEGIN

INSERT INTO dbo.PagePhysicalOrder (SomeNumber, Filler)

SELECT TOP (10)

ROW_NUMBER() OVER (ORDER BY (SELECT 1)) +@i*10,''

FROM sys.objects;

SET @i = @i - 1;

END

|

That gets me 100 rows in the table, written in groups of 10, with the higher values for SomeNumber being inserted first. Now, to find where the rows are stored, I’m going to use the sys.fn_PhysLocFormatter function and the %%physloc%% virtual column. See http://www.sqlskills.com/blogs/paul/sql-server-2008-new-undocumented-physical-row-locator-function/ for more details on these.

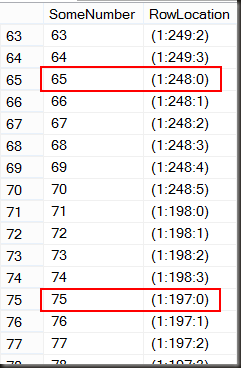

1 2 3 | SELECT SomeNumber,

sys.fn_PhysLocFormatter(%%physloc%%) AS RowLocation

FROM dbo.PagePhysicalOrder

|

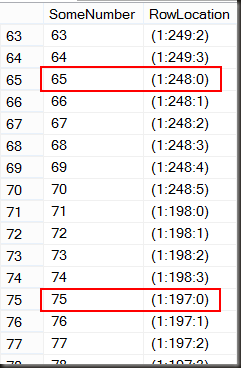

The output of the PhysLocFormatter is FileID : Page Number : Slot Index. The output shows the rows with SomeNumber 75, 76, 77 and a few others are on page 197 while rows with a lower SomeNumber (65-70) are on page 248, further into the data file than the page containing the larger values of SomeNumber.

Hence we can say that the clustered index doesn’t enforce the physical order of the pages in the data file either.

The only thing that the clustered index (or nonclustered indexes) enforce is what values belong on a page together. If we have a table with an index on an integer column, we cannot have a situation where rows with a key value of 1, 2, 4, 8, 9 are on one page and rows with a key value of 3, 5, 6, 7 and 10 are on another. If only 5 rows fit onto a page, one page will have 1, 2, 3, 4 and 5 and another page will have 6, 7, 8, 9 and 10. The physical order of the rows on those pages is irrelevant, as is the physical order of those two pages in the data file.

I suspect this myth came about because, when SQL creates or rebuilds an index, it will try as best as possible to put the pages of the index down in physical order of the index key. Doing so reduces logical fragmentation and allows the read-ahead reads to work as efficiently as possible. This applies only when the index is created, rebuilt or reorganised, not during regular operations.