All about Execution Plans

Coming to the PASS Summit in October this year? Excellent!

I say excellent, because Grant and I are presenting a full-day seminar on the Monday, all about execution plans. Excited already? If not, let me give you a taste of what we’ll be discussing.

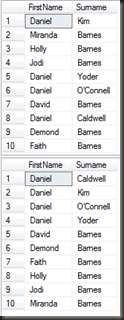

Grant will be kicking things off with a look at how the optimiser works. Not 500-level deep internals (that’d take all day by itself), but an overview of how the optimiser turns this

    SELECT t.Title, u.UserName, u.UserLevel, t.LastModified, t.LastPoster
    FROM Threads t
        INNER JOIN Posts p ON t.ThreadID = p.ThreadID
        INNER JOIN Users u ON p.Poster = u.UserID
    WHERE t.ThreadID = 42

into an execution plan, as well as some DMVs that give a view of what the optimiser is doing.

After that, I’ll be digging into what the plan cache is, how it’s structured and how plans are matched to incoming queries. I’ll also cover how you might go about monitoring SQL’s usage of the cache.

Then we’ll discuss the myriad ways to get execution plans out of SQL, what each of those options returns, and what some of the terms involved really mean (estimated execution plans, anyone?).

Once all that’s out there (which will probably take the entire morning), it’ll be on to the fun of reading the execution plans: what the various icons represent and what all their arcane properties are telling you. Maybe, just maybe, we’ll also have a look at the raw XML form of the plan, just for fun.

And because theory’s not very useful without something to apply it to, we’ll be ending off the day by seeing how you can use the information from the execution plan to tune your queries. After all, everyone wants better performance, right?

Still not completely convinced? Then check out the 24 Hours of PASS coming in September. Both Grant and I are doing a pre-con preview. I’m covering how to extract execution plans and Grant’s doing a portion of the section on reading execution plans.

Right, now that everyone’s dying to attend this, I’m going to toss out a little bit of a challenge. If you’re coming to our pre-con and you have an execution plan that you don’t understand (maybe a strange operator, maybe a property with a value you’ve never seen), mail it to me (gail@<blog domain>) with a note on what you want clarified and we might discuss it during the pre-con. Some rules, just to keep things sane:

- No confidential stuff. I don’t want legal trouble, and neither do you.

- If the exec plan takes 5 minutes to open in SSMS, I’m deleting it.

- If it’s multiple pages in SSMS, I’m deleting it.

- I don’t promise to look at anyone’s plans; it depends on how we’re doing for time.