This one comes up a lot on the forums, often as advice given…

“You should use integers for the key rather than strings. The joins will be faster.”

It sounds logical. Surely integers are easier to compare than large complex strings. Question is, is it true?

This is going to take lots and lots of rows to get any significant results. I’m going to do two sets of tests. The first comparing query execution speed between string and integer data types on the join column while keeping the size of the join column the same between the two. This is to tell if there’s a difference just because of the data type.

The second test will have both the data type and the size of the join columns differing, while the total size of the table row will be kept the same. This is to answer the ‘string joins are slower because they are larger’ argument.

Test 1: Same key size, no indexes

The two tables have the same size join column – a bigint in one and a char(8) in the other.

SELECT t1.ID, t2.ID, t1.IntForeignKey, t2.SomeArbStatus FROM dbo.TestingJoinsInt t1 INNER JOIN dbo.LookupTableInt t2 ON t1.IntForeignKey = t2.ID GO SELECT t1.ID, t2.ID, t1.StrForeignKey, t2.SomeArbStatus FROM dbo.TestingJoinsString t1 INNER JOIN dbo.LookupTableString t2 ON t1.StrForeignKey = t2.ID GO

First up, a check of what Statistics IO and Statistics Time show for the two and whether there’s any difference in execution plan. (Full repo code is available for download, link at the end of the post)

Int joins

Table ‘Worktable’. Scan count 0, logical reads 0, physical reads 0.

Table ‘TestingJoinsInt’. Scan count 1, logical reads 66036, physical reads 0.

Table ‘LookupTableInt’. Scan count 1, logical reads 735, physical reads 0.SQL Server Execution Times:

CPU time = 2433 ms, elapsed time = 32574 ms.

String joins

Table ‘Worktable’. Scan count 0, logical reads 0, physical reads 0.

Table ‘TestingJoinsString’. Scan count 1, logical reads 66036, physical reads 0.

Table ‘LookupTableString’. Scan count 1, logical reads 735, physical reads 0.SQL Server Execution Times:

CPU time = 3744 ms, elapsed time = 33947 ms.

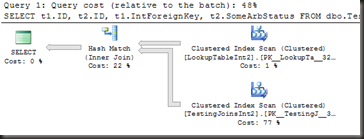

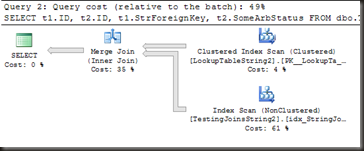

Execution plan’s the same, but that shouldn’t really be a surprise. With no nonclustered indexes there have to be table scans (or clustered index scan) and, with the resultsets not ordered by the join key a hash join is about the only join that could be used efficiently here.

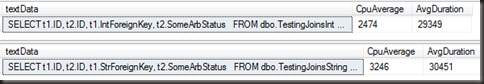

The CPU time is the interesting thing. 35% more CPU time from the string join. To check that the difference is consistent and not a once off, I’m going to run the same test 10 times each and use Profiler to catch the durations and CPU times and aggregate.

That’s a notable difference in the average CPU usage. Average of 31% greater CPU usage from the string join over the integer join.

Maybe an index will fix things…

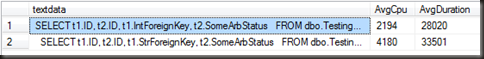

Test 2: Same key size, indexes on join column

Same tables, just with a nonclustered index added on the foreign key column.

Int joins

Table ‘TestingJoinsInt’. Scan count 1, logical reads 4654.

Table ‘LookupTableInt’. Scan count 1, logical reads 735.SQL Server Execution Times:

CPU time = 2043 ms, elapsed time = 30993 ms.

String joins:

Table ‘TestingJoinsString’. Scan count 1, logical reads 4654.

Table ‘LookupTableString’. Scan count 1, logical reads 735.SQL Server Execution Times:

CPU time = 2995 ms, elapsed time = 32904 ms.

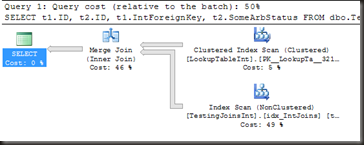

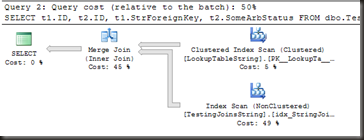

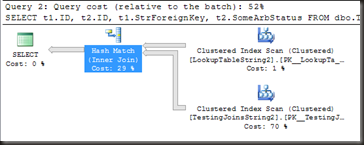

The one scan has changed to an index scan and the join type is now merge join (as the indexes provide the join order), but the plan still has the same form (as would be expected) and there’s still a fairly substantial difference in the CPU times.

On average a 50% increase in CPU time. That’s pretty extreme.

Test 3: Same row size, no indexes

For this test, the join columns are now different sizes. (This is the second script in the attached repo code for anyone using that) I’m using an int in the one table and a char(24) in the other. This is probably a little more realistic, if strings are being used as keys and join columns, there’s a very good chance that it will be longer than if an int was used.

Straight into the testing, the query’s the same form, the names of the tables are the only things that changed.

SELECT t1.ID, t2.ID, t1.IntForeignKey, t2.SomeArbStatus FROM dbo.TestingJoinsInt2 t1 INNER JOIN dbo.LookupTableInt2 t2 ON t1.IntForeignKey = t2.ID GO SELECT t1.ID, t2.ID, t1.StrForeignKey, t2.SomeArbStatus FROM dbo.TestingJoinsString2 t1 INNER JOIN dbo.LookupTableString2 t2 ON t1.StrForeignKey = t2.ID GO

Int joins:

Table ‘Worktable’. Scan count 0, logical reads 0, physical reads 0.

Table ‘TestingJoinsInt2’. Scan count 1, logical reads 64342, physical reads 0.

Table ‘LookupTableInt2’. Scan count 1, logical reads 685, physical reads 0.SQL Server Execution Times:

CPU time = 2293 ms, elapsed time = 30839 ms.

String joins:

Table ‘Worktable’. Scan count 0, logical reads 0, physical reads 0.

Table ‘TestingJoinsString2’. Scan count 1, logical reads 64342, physical reads 0.

Table ‘LookupTableString2’. Scan count 1, logical reads 688, physical reads 0.SQL Server Execution Times:

CPU time = 4290 ms, elapsed time = 36742 ms.

And we’re back to the same plan as in the first test – clustered index scans and hash join, for the same reasons. I seem to have messed up somewhere in trying to keep the tables the same size. Still, 3 reads difference on the lookup table is not really a large difference.

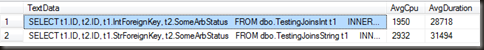

Just as in all the previous tests, the average CPU usage on the string join is markedly higher. This time it’s nearly 100% greater than for the int joins.

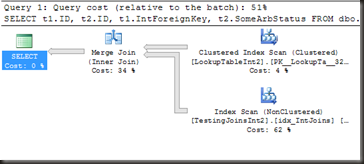

Test 4: Same row size, index on join column

In this test I’m breaking my own test rules a bit. While the table’s row size is the same between the two tables, the index row size is not. Still, I feel it’s fair enough as it reflects what would be done on a real system (no one pads out indexes for no reason)

Int joins:

Table ‘TestingJoinsInt2’. Scan count 1, logical reads 3407, physical reads 0.

Table ‘LookupTableInt2’. Scan count 1, logical reads 685, physical reads 0.SQL Server Execution Times:

CPU time = 2075 ms, elapsed time = 30459 ms.

String joins:

Table ‘TestingJoinsString2’. Scan count 1, logical reads 9625, physical reads 0.

Table ‘LookupTableString2’. Scan count 1, logical reads 688, physical reads 0.SQL Server Execution Times:

CPU time = 3775 ms, elapsed time = 34028 ms.

Same plan as the second test. I hope no one’s surprised by that.

And now we’re over a 100% increase in average CPU times for these two. The differing row sizes (with corresponding differing page counts) will be contributing to that, but just contributing, not causing, since we were seeing similar increases in earlier cases.

Conclusion

Is the use of integer data types better for join columns than strings? It certainly does appear so, and not insignificantly either. Bear in mind though that what I was doing here was a bit extreme. 2.5 million rows in a a query with no filters applied. This shouldn’t be something that ever gets done in a real system. So it’s not a case that YMMV1, it’s a case that it almost certainly will. You probably will see different results, but it is definitely something worth testing when planning data types for a DB

While this may not the final nail in the coffin for natural keys, it is worth keeping in mind when choosing between natural and artificial keys for a system, especially one likely to process large numbers of rows, such as a decision support/datawarehouse system. Test carefully with expected data volumes and expected loads if you’re considering natural keys and decide based on the result of those tests.

Repo code: Int vs String joins

(1) YMMV = ‘Your mileage may vary’, colloquialism that means that you may get a different result from me.

Very nice tests tks for that.

[]

Fabiano

Very good and useful analysis, I enjoyed reading it. It would be interesting to see the effect of int vs string joins on queries that return a smaller set of rows but that are executed much more often. Thanks.

One thing to consider is that regardless of the comparative CPU cost of joining 4-byte-ints vs. 20-plus-byte strings, a global truism is to keep columns as short as possible, *especially* those involved in the clustered index or other indexed columns. Even if the joins themselves were no more expensive in terms of CPU cycles, you’re just going to get fewer rows per page with wider columns (which = more reads to satisfy the same request). Never forget that the clustered index columns are repeated in every row of the nonclustered indexes…

Very interesting article. I’ve always ‘known’ ints to be faster than strings for joins, but never cared enough to really test it. Nice to have some supporting evidence. I’ve always subscribed to using IDENTITY surrogate keys and unique constraints to enforce any natural keys. I think the much more interesting metric would be the difference between using ints and guids (uniqueidentifier) as keys.

I’ve had several requests for additional tests (strings where the differences are at the beginning vs at the end, effects of collation), so I will revisit this in the future and do some additional experiments.

What about using a CS or BIN collation?

Adam Machanic mentioned the potential impact of other collations. I’ll test that another time.

This test was with the ‘SQL_Latin1_General_CP1_CI_AS’ collation.

Pingback: SQL University - Dimensions, It's All Meaningless Without Them | Made2Mentor

Fantastic! Like mattmc3, this is something I’ve always “known” this – but have never taken the time to quantify it!

Thanks!! this was indeed a Gila-Monster post.The was you have included the real-tests along with the theory is commendable!

Thanks for the article, very clearly explained the difference in performance between the two types of joins.

Was this run on an x86 or a x64 system? I would be interested to see if that made a difference.

x64. I don’t own a 32-bit machine anymore, I’d have to use a virtual to test that

Performance should only degrade further on a 32-bit machine. I was just faintly hoping natural keys still had a chance on x64 bit systems.

Great post, and good food for thought. I do occasionally use char(4) natural keys instead of ints as FKs, and have never wondered about the join issue. May want to think about collation next time I do that! Having said that, I’m actually impressed with how low the string manipulation overhead is, assuming it’s due to the case-insensitive compare.

Fantastic job Gail! Thanks

Awesome article Gail! I too am interested in int/bigint vs. guid and seeing those metrics. I love your myth busters approach.

Jim Murphy

SQLWatchmen

Big CPU differences – but smaller differences in elapsed time. Query Cost relative to batch percentage differences are also small.

This tells me that the CPU time of that particular part of the query isn’t the evil villian you are making it out to be. Wall-clock didn’t change much.

You have not convinced me that integer keys are preferable.

The server has a CPU for me to use – I want to utilize it. What I really don’t want to use is physical I/O – that’s tanks a database way more then an extra 800 milliseconds of cpu use…

Fine until you scale out and are CPU bound – To me, natural keys only make sense when they are ints or bigints. Then all JOINS are on bigints or ints. Consistent, faster, less CPU overhead. Clustered indexes (typically PKs) should be unique, small, and ever-increasing. Non-numeric natural keys rarely, if ever, fit these criteria for large tables.

With the size of data I was playing with, the majority of the elapsed time is going to be in displaying the data, and since the data volumes are the same the display times are going to be close.

Yes, the server has CPU to use, but not to waste. I want the CPU running as efficiently as possible so that I can put more useful work onto one server. Sure, 800ms of CPU is nothing, unless it’s 800ms extra on a query that runs 30 times a minute.

I specially crafted these tables so that the IO would be the same, so that I could focus on the CPU differences. In reality, that’s not going to be the case, in reality there will be a difference in IO between a large table with a 4-byte int and a large table with a 50-byte varchar, especially if the PK is also the cluster.

I achieved different results when comparing in this situation, where a cmopany uses IDENTITY INT PKs, but also has a Code varchar(10) unique column and a Name varchar(50) column. I used TABLESAMPLE (5 PERCENT) to grab a sizable junk of data from one of our regularly used tables. Then I did two test scenarios, one where I converted the Code varchar(10) to Code char(10), and the other leaving it as varchar. Then in each of the lookup tables (128 rows), I added the primary key clustered. Then in the data tables, I added an index for the Code with included columns for the PK, and a couple of dates. Then I did REBUILD on all the indexes I had just made, for safety. Then I executed each query ten times.

With int compared to varchar, the CPU usage was 45% query cost versus 55% query cost.

With int compared to char, the CPU usage was 48% query cost versus 52% query cost.

But in both examples the char/varchar query ran >= 20 ms faster. I noticed in the execution plans that the string joins used a merge join and the int join used a nested loop.

I have two theories for the CPU difference. One that the index map for the string result set is constructed more efficiently than for interegers- especially if the int result set varies in range like (1,2,3,4,5,10999,12093) from unit tests.

My other theory is that perhaps the string comparisons make better use of multi-core machines?

But in either case I always favor using real primary keys, instead of INT IDENTITY(1,1). If your data becomes corrupted or you want to setup for manual replication in the future, using a real primary key is much more helpful from my perspective.

Still, it was good to see you putting this out there. I wonder if I could reproduce the same results by expanding to varchar(24).

Thanks a lot, very nice experience.

If someone doesn’t want to lunch each query 10 time, juste :

– Execute

SET STATISTICS IO ON

SET STATISTICS TIME ON

– Replace “GO 10” by “GO”

Pingback: Avoid using complicated strings in Joins in SQL Server?